The Rise of GenAI in Citizen Development (and Cybersecurity Challenges That Come With It)

Introduction

Citizen developers, often without a formal background in programming, are using generative AI to build powerful business applications and automations on low-code/no-code platforms like Microsoft Power Platform, Salesforce, and ServiceNow. While this democratization of software development brings numerous benefits, it also introduces a host of new cybersecurity risks that organizations must address to safeguard their sensitive data and stay compliant.

The Proliferation of Shadow Application Development

One of the key cybersecurity challenges associated with the rise of citizen developers is the proliferation of shadow application development. Unlike traditional IT projects, which are typically managed by trained professionals, citizen developers may create applications outside the purview of IT departments. In fact, this is part of the appeal, as business users no longer need to bother IT or developer teams with mundane requests; they can now just build things themselves with the power of Generative AI Copilots. However, this lack of visibility can lead to the creation of “shadow applications” that operate independently, often without adhering to the organization’s security protocols.

These shadow applications may access and manipulate critical data without proper oversight, potentially exposing the organization to security vulnerabilities. To mitigate this risk, companies must establish clear policies regarding application development, emphasizing collaboration between citizen developers and IT professionals to ensure that security measures are integrated from the inception of the project.

One of many examples we see is teams using citizen development to build integrations between third-party SaaS tools. While useful for productivity, this means IT does not review how these integrations are built, and there is no visibility into what data flows in and out of the company's environment.
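To make the visibility gap concrete, here is a minimal sketch of how a security team might flag such integrations from an inventory. It assumes a hypothetical inventory export; the `Integration` record, its fields, and the `INTERNAL_DOMAINS` set are illustrative and do not come from any specific platform's API.

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical: domains the organization considers internal.
INTERNAL_DOMAINS = {"example.internal"}


@dataclass(frozen=True)
class Integration:
    """One citizen-built integration, as it might appear in an inventory export."""
    name: str
    owner: str
    endpoint: str      # URL the integration sends data to
    it_reviewed: bool  # whether IT has reviewed this integration


def is_external(endpoint: str, internal_domains: set[str] = INTERNAL_DOMAINS) -> bool:
    """Return True if the endpoint's host is not under any internal domain."""
    host = urlparse(endpoint).hostname or ""
    return not any(host == d or host.endswith("." + d) for d in internal_domains)


def shadow_integrations(integrations: list[Integration]) -> list[Integration]:
    """Return integrations that send data outside the company without IT review."""
    return [i for i in integrations if not i.it_reviewed and is_external(i.endpoint)]
```

In practice the hard part is building the inventory in the first place; the filter itself is simple once every integration is recorded with its destination and review status.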

Unawareness of Apps Interacting with Critical Data

As citizen developers build applications to streamline business processes with simple text prompts or even document scanning, there is a risk that nobody has a clear picture of which apps interact with critical data. Without proper documentation and communication channels between citizen developers and IT departments, IT may not understand what data these applications handle, and that blind spot carries over to the security team.

This poses a significant threat to data security, as sensitive information may be unintentionally exposed or mishandled. Organizations should implement robust communication channels and documentation processes to ensure that citizen developers are aware of the data they are working with, and that IT teams can assess the applications and implement appropriate security measures.

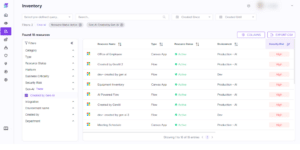

A simple example is an app that embeds Generative AI to help end users. Think of an inventory application where end users ask the app (and therefore the GPT model) to retrieve data held in the application about who has access to certain things. While useful, if the application maker gave the AI Copilot excessive access rights, end users may be shown, and thereby gain access to, data they have no reason to see.
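One way to reason about this risk is to compare what the embedded copilot can read with what the person asking is entitled to read. The sketch below is illustrative only: `Principal`, the scope names, and both functions are hypothetical and are not part of any copilot product's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    """A user or an embedded AI copilot, with the data scopes it may read."""
    name: str
    scopes: frozenset[str]


def excess_scopes(copilot: Principal, end_user: Principal) -> set[str]:
    """Return scopes the copilot holds that the end user does not."""
    return set(copilot.scopes - end_user.scopes)


def answer_allowed(copilot: Principal, end_user: Principal, required_scope: str) -> bool:
    """Allow an answer only if both the copilot and the asking user hold the scope."""
    return required_scope in copilot.scopes and required_scope in end_user.scopes
```

A non-empty result from `excess_scopes` is a signal that the copilot could surface data the user should not see. Checking the asking user's own permissions at answer time, as `answer_allowed` does, is one possible mitigation.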

Compliance Failures in the Era of Citizen Developers

With the increasing reliance on citizen developers, organizations may face challenges in maintaining compliance with industry regulations and data protection standards. Citizen developers may not have a comprehensive understanding of the legal and regulatory frameworks governing data privacy, leading to unintentional breaches.

To address this issue, companies must invest in training programs that educate citizen developers about compliance requirements and the importance of adhering to data protection standards. Regular audits and assessments can help ensure that the applications developed by citizen developers align with regulatory guidelines, preventing costly legal ramifications and reputational damage.

A simple example is a user who builds an application that, without their knowledge, moves data between regions, causing a GDPR compliance failure.
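A check for this kind of cross-region movement could look roughly like the sketch below. It assumes each data flow can be described by a data classification plus source and destination regions. The `DataFlow` record, the region names, and the `ALLOWED_REGIONS` policy are hypothetical placeholders, not a statement of what GDPR permits.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical policy: regions where each class of data may be stored.
ALLOWED_REGIONS = {"customer_pii": {"eu-west", "eu-central"}}


@dataclass(frozen=True)
class DataFlow:
    """One data movement performed by a citizen-built app or automation."""
    app: str
    data_class: str
    source_region: str
    destination_region: str


def residency_violations(
    flows: list[DataFlow],
    allowed: dict[str, set[str]] = ALLOWED_REGIONS,
) -> list[DataFlow]:
    """Return flows that send a restricted data class to a region outside its policy."""
    return [
        f for f in flows
        if f.data_class in allowed and f.destination_region not in allowed[f.data_class]
    ]
```

Data classes without a policy entry are ignored here. A stricter variant could treat unclassified data as a violation until it has been reviewed.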

How to Solve These Problems

The advent of citizen developers leveraging generative AI capabilities has undoubtedly transformed the way businesses approach application development. However, this paradigm shift comes with its own set of cybersecurity challenges, including shadow application development, lack of awareness about data interactions, and compliance failures.

Zenity, the world’s first platform for securing and governing citizen development, has recently unveiled new capabilities that help our customers get a handle on which applications and automations have been created through citizen development. Security teams also get security violation data that is automatically generated for each individual app, automation, connection, or dataflow created using Generative AI, so they can see the risks in real time.

To fully harness the power of citizen development and Generative AI (as even the world’s biggest companies are hedging their bets), organizations must prioritize collaboration between citizen developers and IT professionals, establish clear communication channels, and invest in technology that helps govern and secure citizen development. By taking a proactive approach, businesses can benefit from the innovative potential of citizen developers while safeguarding their sensitive data and maintaining compliance with industry regulations.