Zenity Leads the Charge by Becoming the First to Bring Application Security to Enterprise AI Copilots

What Happened?

Microsoft Ignite 2023 was eventful, with many announcements across Microsoft’s AI Copilot capabilities. The biggest, in our opinion, is Microsoft Copilot Studio, a low-code tool that allows professional and citizen developers to build standalone AI Copilots, as well as customize Microsoft Copilot for Microsoft 365. These advancements allow business users (of all technical backgrounds, mind you) to customize Copilot for Microsoft 365 and publish standalone copilots and custom GPTs, while being left to their own devices to manage and secure them.

While this may seem like the first step of a new Terminator movie with “Skynet” coming for us all, it really is an exciting breakthrough that Microsoft has delivered for its customers. Generative AI Copilots enable more business users to be more productive than ever before and do a lot to bring people closer to technology. However, there are also massive security ramifications that must be accounted for, which is why we are so pleased to announce Zenity as the first security vendor to protect Microsoft Copilot Studio, and to introduce new capabilities within the Zenity platform that enable security teams to maintain visibility and control over how Generative AI is used throughout the organization.

What’s Different About Copilot Studio?

For starters, Copilot Studio makes it possible for any user to build new AI agents and bots from scratch, adding rocket fuel to both the citizen development movement and the adoption of Gen AI capabilities. It is also increasingly favored by professional developers, who can now build smarter and more integrated bots backed by their own AI Copilots. What this means in practice is that any user can now build, test, and customize their own copilots and GPTs, rather than relying on the centralized GPT platform itself, with no developer knowledge required. Every user gets complete control over their standalone copilots and can do whatever they please.

Anyone can now connect Copilot to enterprise data sources, through pre-built or custom plugins, to tap into any system (think: M365, Azure, SaaS apps, databases, SQL servers, etc.) and build customized solutions that can generate real-time answers. Rather than just creating a chatbot with pre-canned answers that eventually leaves you with “for more information, reach out to the Help Desk,” business users can create adaptive apps and bots that provide answers in real time based on real and evolving data.

What are the Risks?

There are massive risks with this type of development democratization, which can be boiled down to three main groups: data leakage, over-sharing, and a lack of visibility. To illustrate these risks, let’s walk through an example of how a business user might use Copilot Studio.

Say you’re in accounting and you want to build a bot that can help you stay on top of expense reports that employees are filing. Now, with Copilot Studio, you can build a bot in Power Virtual Agents and insert an AI Copilot so that it gives end users accurate, up-to-date information when prompted. You and members of your team can simply open up the bot and ask it “Tell me how much money we have left to spend on office equipment this quarter,” and it will query a database, look through reports, and more to give you an accurate number.

However, for the bot to access this information in real time, it must have access to a SQL database, so it needs SQL credentials to authorize itself. In setting up this bot, the builder has two ways to establish the connection. They can configure the connection to force each individual user to bring their own credentials to authenticate. Or, more frequently, the bot is built so that the bot builder/owner brings their own credentials, which are then used by, and effectively shared with, every other user of the bot.

The latter is far more common for a couple of reasons. For one, some connections, like SQL servers and Azure connections, do this by default, and citizen developers (and even professional developers) may not know about it or may not be technically capable of changing it. For another, citizen developers may not know the difference and simply take the path of least resistance by entering their own credentials.

Unfortunately, this results in data leakage, implicitly shared and over-shared applications, and an overall lack of visibility for security teams.
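To make the difference between the two connection choices concrete, here is a minimal Python sketch. It is an illustration only, not Copilot Studio's actual API: the `Credential` and `BotConnection` classes and the `effective_identity` method are hypothetical names invented for this example. It shows whose identity the database sees depending on how the builder set up the connection.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Credential:
    """Hypothetical stand-in for a database login."""
    owner: str  # the identity the database sees when this credential is used


@dataclass
class BotConnection:
    """Hypothetical model of a bot's data connection (not a real API).

    If maker_credential is set, every user of the bot queries the database
    as the builder. Otherwise each user must bring their own credential.
    """
    maker_credential: Optional[Credential] = None

    def effective_identity(self, user_credential: Optional[Credential]) -> str:
        # Embedded builder credentials win, regardless of who is asking.
        if self.maker_credential is not None:
            return self.maker_credential.owner
        if user_credential is None:
            raise PermissionError("user must authenticate with their own credential")
        return user_credential.owner


def is_implicitly_shared(conn: BotConnection) -> bool:
    """True when the builder's access is shared with every user of the bot."""
    return conn.maker_credential is not None


shared = BotConnection(maker_credential=Credential("accounting_lead"))
per_user = BotConnection()

# Any user, even one with no database access, acts as the builder.
print(shared.effective_identity(None))
# Each user is limited to what their own credential allows.
print(per_user.effective_identity(Credential("intern")))
```

In the shared case, the database cannot tell users apart, which is why both the over-sharing and the loss of visibility follow from a single configuration choice.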

The Solution

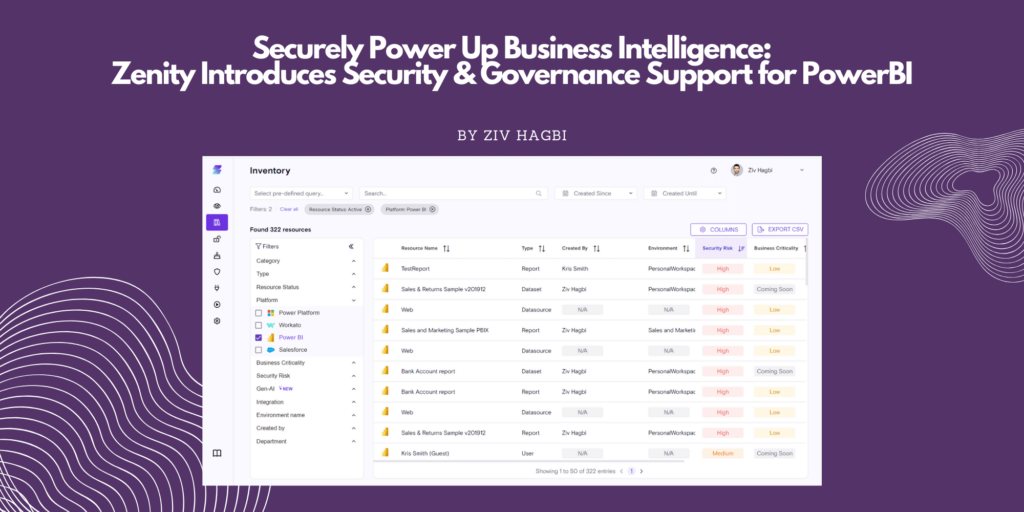

The volume and speed of development continue to accelerate, and staying on top of each individual app and connection is harder than ever before. At Zenity, we are proud to announce the first solution that helps organizations gain visibility and control over custom-built GPTs and bots that are used within the enterprise. By extending our security and governance platform to Copilot Studio, we empower our customers to:

- Gain visibility into all apps, bots, automations, and more that people are creating via Copilot Studio, and secure user-built GPTs and AI chatbots as well. Further, we have extended our already leading SBOM capabilities to detect all the different components and connectors that are built into Generative AI Copilots and GPTs, with Microsoft boasting over 1,100 pre-built connectors and thousands more that are custom built.

- Assess each Copilot for security vulnerabilities. Zenity is able to map vulnerabilities in these new resources to popular security frameworks like the OWASP Top 10 or MITRE to help illuminate which apps contain hard-coded secrets, which are implicitly shared, which leak data, and more.

- Govern proper usage of Generative AI Copilots. With Zenity, customers can now block Copilot from accessing sensitive business data and sharing it with unauthenticated users. Organizations can fully unleash citizen development by making sure that however people build their own apps, automations, bots, and connections, those resources are secure and developed within the defined guardrails of the organization.
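As a rough illustration of what assessing a copilot inventory can look like, the Python sketch below flags the three conditions called out above: unauthenticated access, implicitly shared credentials, and hard-coded secrets. It is not Zenity's implementation or API. The `CopilotRecord` fields, the finding labels, and the `SECRET_MARKERS` heuristic are all assumptions made up for this example, and a real scanner would need far more robust detection.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical, deliberately naive markers for secrets embedded in config text.
SECRET_MARKERS = ("password=", "pwd=", "secret=", "apikey=")


@dataclass
class CopilotRecord:
    """Hypothetical inventory entry for one user-built copilot."""
    name: str
    requires_user_auth: bool       # must end users sign in before chatting?
    uses_maker_credentials: bool   # are the builder's credentials embedded?
    config_values: List[str] = field(default_factory=list)


def assess(bot: CopilotRecord) -> List[str]:
    """Return illustrative finding labels for a single copilot."""
    findings = []
    if not bot.requires_user_auth:
        findings.append("unauthenticated-access")
    if bot.uses_maker_credentials:
        findings.append("implicitly-shared-credentials")
    if any(marker in value.lower()
           for value in bot.config_values
           for marker in SECRET_MARKERS):
        findings.append("hard-coded-secret")
    return findings


inventory = [
    CopilotRecord("expense-bot", requires_user_auth=False,
                  uses_maker_credentials=True,
                  config_values=["Server=db01;Pwd=example-only"]),
    CopilotRecord("hr-faq-bot", requires_user_auth=True,
                  uses_maker_credentials=False),
]

for bot in inventory:
    print(bot.name, assess(bot))
```

Labels like these could then be mapped to whichever framework categories an organization reports against.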

The recent closure of our Series A round was done with one eye on how to secure Generative AI, as this is a natural extension and added capability for both professional and citizen developers. We are excited to see how this creates a ripple effect, with more and more software vendors likely following suit to put more Generative AI capabilities into the hands of business users, which requires stringent security that does not block productivity. If you’d like to see this in action, or are experiencing challenges already, reach out to us to see a demo or schedule a call directly with one of our representatives!