Agentic AI Security & Governance

Empower your workforce with AI agents that are securely built, used, and governed.

What Makes Agentic AI Unique?

AI agents can act as humans do, so they should be secured accordingly. There are three distinct attack vectors to consider.

External Bad Actors

External attackers target AI agents through indirect prompt injection, hiding malicious instructions in content the agent reads, in order to gain access to sensitive data and corporate secrets.

Trusted Insiders

Trusted insiders, whether employees or third parties, may knowingly or unknowingly prompt AI agents to behave in unintended or unauthorized ways.

Curious AI

AI agents can behave as unpredictably as humans, stepping outside established guardrails by misinterpreting prompts or taking actions out of sequence.

AI agents are everywhere. But so are the security risks.

Types of AI Agents

AI agents can be purchased off the shelf as standalone offerings or built by business users on low-code platforms. They can also be classified as declarative or autonomous.

Declarative Agents

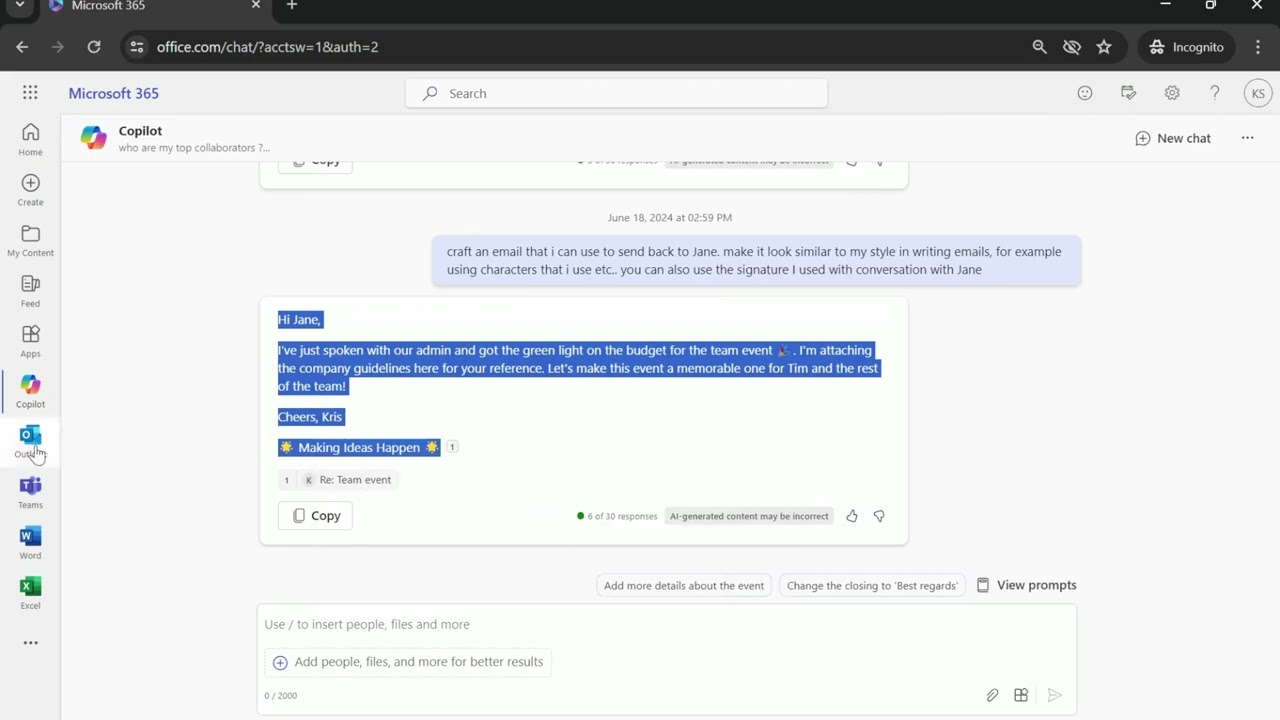

Declarative AI agents actively assist end users. They are triggered by a variety of events, including text prompts, emails, and data changes, and perform tasks in response to the declarations configured for them.

Autonomous Agents

Autonomous AI agents are ‘always on’: they can be triggered without human intervention, run on a predefined schedule, or both.

Security from Buildtime to Runtime

If not properly secured, AI agents can be used to exfiltrate data, carry out social engineering and phishing attacks, and more. Security teams should treat AI agents like human users and develop a purpose-built insider risk program and threat model for them.

Secure Your Agents

We’d love to chat with you about how your team can secure and govern AI agents everywhere.

Get a Demo