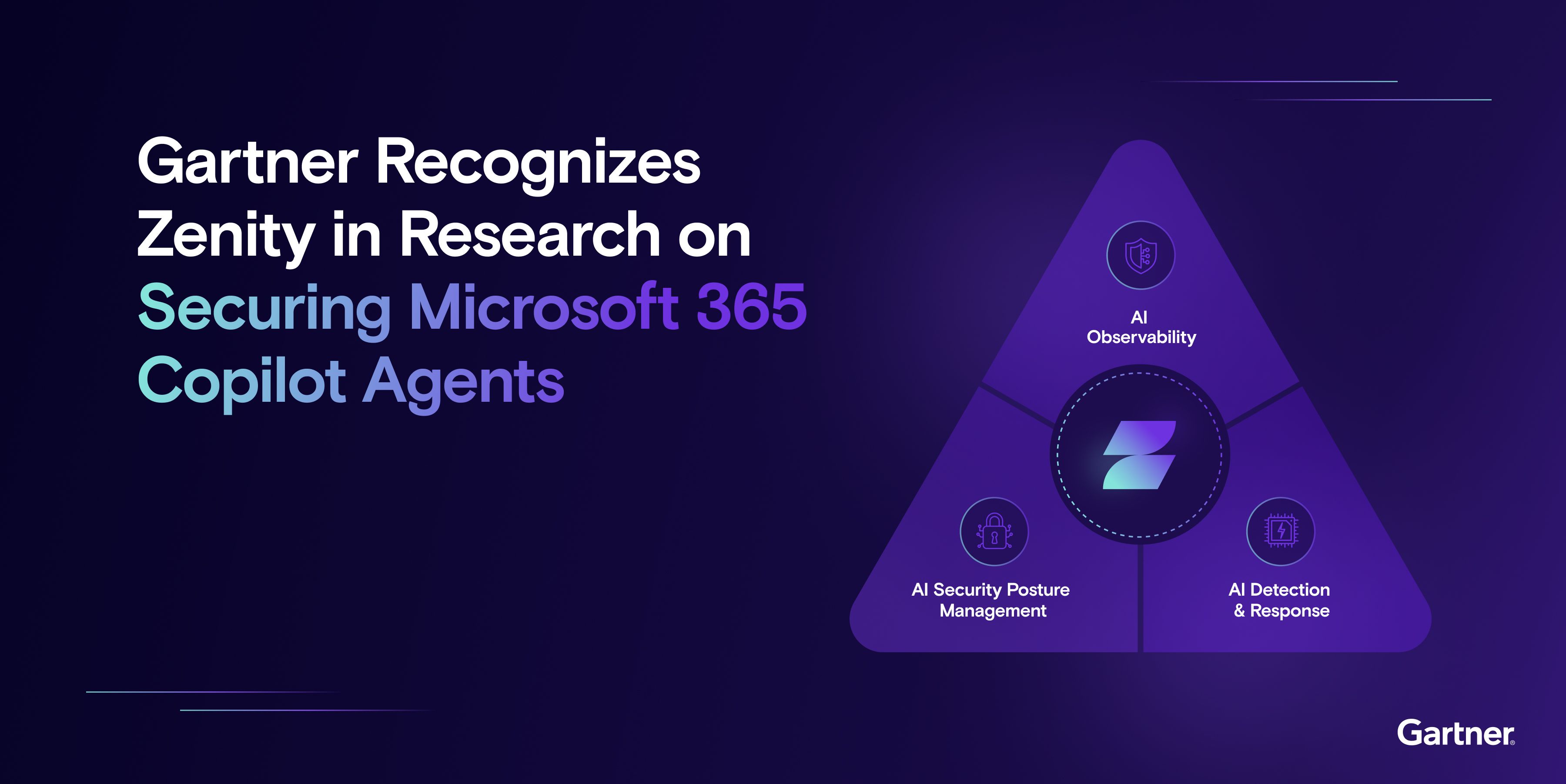

Gartner Recognizes Zenity in Research on Securing Microsoft 365 Copilot Agents

While AI Agents bring tremendous benefits to the enterprise, they are also automatically available for anyone to create, customize, and use. As in the citizen development revolution, business users of all technical backgrounds are building and using powerful AI Agents to boost productivity, which creates distinct security and compliance risks that need to be accounted for. In its latest research, “How to Mitigate the Risks of Microsoft 365 Copilot Agent Sprawl,” Gartner outlines those risks, from data leakage and misinformation to agent hijacking and runaway costs, and identifies solutions to help organizations navigate and secure this new frontier.

Understanding the Risks of AI Agent Sprawl

As with shadow AI, when enterprises rapidly adopt AI Agents like Microsoft 365 Copilot, security teams must account for a large number of new systems that they cannot necessarily see or manage with native controls. On top of that, enterprises adopting M365 face unprecedented risks, including data leakage, unauthorized access, runaway costs, and the proliferation of misinformation. Gartner predicts that by 2027, unmanaged agent sprawl will become one of the top identified risks for organizations using Microsoft 365.

Gartner also notes that for the next two months, an eternity in the world of agentic AI, anyone, even those without Copilot licenses, can use agents in SharePoint sites. Further, anyone can create agents via Copilot Chat (pinned to Teams) or sign up for a free trial of Copilot Studio to create and customize agents that are used throughout the enterprise.

While democratizing the development and use of agents is a net positive, security and governance controls need to keep up.

Practical Steps to Secure AI Agents

Zenity recommends a proactive approach to governing the AI Agents that are created, customized, and used, tailored to the specifics of the Microsoft ecosystem:

- Observability for all AI Agents: Establish comprehensive and ongoing monitoring to track agent creation, usage, and behavior, ensuring transparency and rapid response to potential threats.

- Adaptive Governance: Apply governance proportional to risk. Less complex agents require lighter oversight, while high-risk agents, particularly those handling sensitive or regulated data, demand stricter controls.

- Lifecycle Management: Regularly audit AI Agents for outdated permissions, stale content, or unauthorized modifications—reducing the risk of inadvertent exposure or misuse.
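To make the adaptive governance and lifecycle management ideas concrete, here is a minimal sketch of how a team might tier agents by risk and flag overdue reviews. Everything in it is an assumption for illustration: the `Agent` record, its field names, the three tier labels, and the 90-day review window are hypothetical and do not come from Gartner, Microsoft, or any Zenity product.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Agent:
    # Hypothetical inventory record; field names are illustrative only.
    name: str
    handles_sensitive_data: bool
    shared_org_wide: bool
    last_reviewed: date


def governance_tier(agent: Agent) -> str:
    """Map an agent to an oversight tier proportional to its risk."""
    if agent.handles_sensitive_data:
        return "strict"    # sensitive or regulated data: tightest controls
    if agent.shared_org_wide:
        return "standard"  # broad reach, but no sensitive data
    return "light"         # simple, narrowly shared agents


def needs_audit(agent: Agent, today: date, max_age_days: int = 90) -> bool:
    """Flag agents whose last review is older than the allowed window."""
    return today - agent.last_reviewed > timedelta(days=max_age_days)


# Example inventory with made-up agents and dates.
inventory = [
    Agent("hr-benefits-bot", True, True, date(2025, 1, 10)),
    Agent("lunch-menu-helper", False, False, date(2025, 3, 1)),
]
today = date(2025, 4, 15)

for agent in inventory:
    print(agent.name, governance_tier(agent), needs_audit(agent, today))
```

In practice the inventory would come from whatever observability tooling tracks agent creation and usage, and the tiering rules would reflect the organization's own risk criteria rather than two boolean flags.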

While Microsoft has many strong controls, enterprises must understand that an implicit shared responsibility model applies when they enable agentic platforms across the organization. Essentially, it boils down to enterprises being proactive about securing the agents themselves, not just the users or the data.

Zenity's Commitment to Securing the AI Agent Revolution

This is one of several recent pieces of analyst research that highlight the scope and urgency of AI Agent security risks and why an end-to-end security platform for AI Agents is needed. As noted in many of them, Zenity is helping organizations navigate this new reality with clarity and control. We remain focused on helping enterprises adopt AI Agents responsibly, with the right guardrails in place from buildtime to runtime.

If you're looking for guidance on how to manage AI Agent risks, visit our Security Assessment Hub, reach out to start a conversation, or come visit us at RSA. There, our CTO and co-founder, Michael Bargury, is leading a talk alongside Ryan McDonald, Principal Program Manager at Microsoft, on how Microsoft built a secure software development lifecycle for internal citizen development, which has already seen the custom development of over 50,000 agents.
