Artificial Intelligence Security Posture Management (AISPM): An Explainer

As AI Agents continue to transform how business gets done, securing these agents has become paramount. Organizations have rushed to adopt DLP processes and whitelist/blacklist policies to block malicious prompts. However, DLP and firewalls have been around for a very long time and have had only limited success in stopping users from copying and pasting sensitive information onto the internet. Some attacks bypass DLP approaches entirely. For example, hidden instructions embedded in emails that AI assistants and agents read can result in jailbreaks. As such, we expect traditional cybersecurity approaches to fall short in addressing the unique and sophisticated challenges that AI poses in the enterprise.

The reason is that the real risk with AI Agents lies not in their inputs and outputs but in what each agent does: which identities it uses, what data it accesses, what actions it takes, which other agents it communicates with, and so on. Because AI Agents are designed to reason and act like humans do, they can (and do) make human-like mistakes that have real repercussions for enterprise security. It is high time for security to catch up and design insider threat models for AI Agents, just as we have for humans for years.

This gap has led to the emergence of Artificial Intelligence Security Posture Management (AISPM), a capabilities framework designed to monitor, assess, and improve the security posture of AI systems throughout their lifecycle. In this blog, we’ll explore the key tenets of AISPM and why they are essential for safeguarding AI systems.

Context

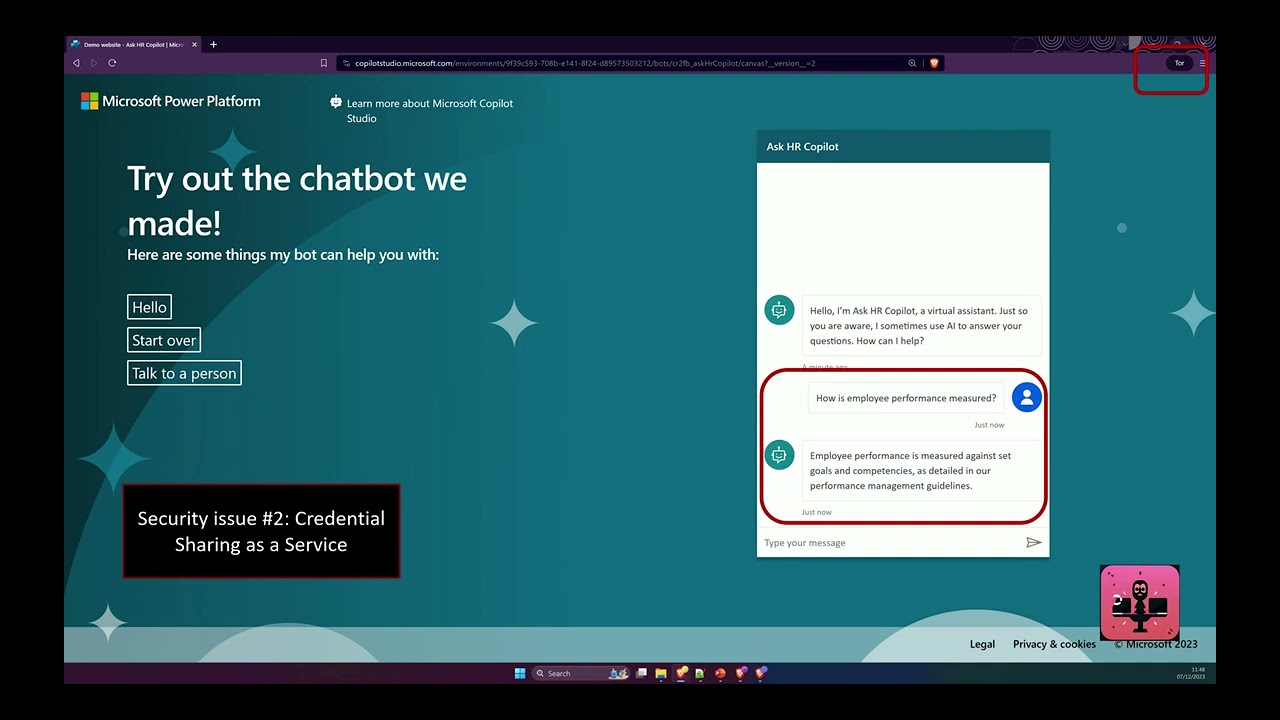

Before diving into the different tenets of AISPM, it's worth laying the groundwork for why AISPM is so relevant for AI Agents. Several developments in recent years have made AI Agent development accessible to far more people. At the top of that list are low-code/no-code development platforms, such as Microsoft Copilot Studio, Salesforce Agentforce, ServiceNow AI Agent Studio, and OpenAI Enterprise, which serve as the foundation for agentic AI.

The democratization of AI Agent development is a massive productivity boost: business users can take their line-of-business knowledge and apply it directly to the agents and apps they want built for day-to-day work. However, it also means that business users across the technical spectrum are now responsible for what knowledge agents have, what actions they can perform, what prompts or triggers them, which other business applications they interact with, and so on. This is the root of why AISPM is so critical for detecting and stopping vulnerabilities.

1. Composition Observability

To implement security and governance for Agents, it is critical to understand the components they are built from. As AI Agents are built and shared across the enterprise, security teams need visibility into all the applications, actions, and data that Agents access, process, and rely on as they operate autonomously. This includes identifying any components of the agent that contain hard-coded secrets, are over-shared, embed the builder's identity, and/or access sensitive data they should not. With so many third-party and custom code components that can easily be built into agents, AISPM helps illuminate these risks.
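As a rough illustration of composition observability, the sketch below walks a hypothetical inventory of one agent's components and flags the four risks just listed. The `Component`/`Agent` model, the credential-key regex, the sensitivity labels, and the `vault://` secret-reference prefix are all assumptions made for this example. Real platforms expose their own inventory APIs, and a production scanner would need much richer secret detection.

```python
import re
from dataclasses import dataclass, field

# Hypothetical inventory model for illustration; not any platform's real schema.
@dataclass
class Component:
    name: str
    kind: str                      # e.g. "connector", "action", "knowledge", "trigger"
    config: dict = field(default_factory=dict)
    shared_with: list = field(default_factory=list)
    auth_mode: str = "end_user"    # "builder" means it runs as the maker's identity
    data_labels: set = field(default_factory=set)

@dataclass
class Agent:
    name: str
    components: list
    allowed_labels: set = field(default_factory=set)  # sensitivity labels this agent is approved for

# Assumed heuristics: credential-like config keys, labels treated as sensitive,
# and audiences treated as "everyone". "vault://" is a made-up secret-store prefix.
SECRET_KEY_HINTS = re.compile(r"(api[_-]?key|secret|password|token)", re.IGNORECASE)
SENSITIVE_LABELS = {"confidential", "restricted"}
BROAD_AUDIENCES = {"everyone", "all_users", "anonymous"}

def scan_agent(agent: Agent) -> list:
    """Return (component, finding, detail) tuples for hard-coded secrets,
    over-sharing, embedded builder identity, and unapproved sensitive data."""
    findings = []
    for c in agent.components:
        for key, value in c.config.items():
            # A literal string under a credential-like key counts as hard-coded;
            # a reference into a secret store does not.
            if (SECRET_KEY_HINTS.search(key) and isinstance(value, str)
                    and value and not value.startswith("vault://")):
                findings.append((c.name, "hard_coded_secret", key))
        if BROAD_AUDIENCES & {a.lower() for a in c.shared_with}:
            findings.append((c.name, "over_shared", ",".join(c.shared_with)))
        if c.auth_mode == "builder":
            findings.append((c.name, "embedded_builder_identity", c.auth_mode))
        for label in sorted((c.data_labels & SENSITIVE_LABELS) - agent.allowed_labels):
            findings.append((c.name, "sensitive_data_access", label))
    return findings

# Example inventory with deliberately risky components.
example = Agent("hr-helper", [
    Component("payroll-api", "connector", config={"api_key": "sk-live-123"},
              auth_mode="builder", data_labels={"restricted"}),
    Component("faq-docs", "knowledge", shared_with=["Everyone"]),
    Component("ticketing", "connector", config={"api_key": "vault://kv/ticketing"}),
])
findings = scan_agent(example)
for finding in findings:
    print(finding)
```

The literal `api_key` on `payroll-api` is flagged while the `vault://` reference on `ticketing` is not, which is the distinction between a hard-coded secret and a managed one.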

2. Continuous Monitoring

AISPM also emphasizes continuous monitoring to identify and mitigate vulnerabilities in AI systems. This includes regularly evaluating each AI Agent for vulnerabilities such as adversarial attacks, data poisoning, and model inversion. It also means ensuring that the platforms, software, and other components that support AI operations are designed and configured securely.
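A minimal sketch of one slice of continuous monitoring, configuration drift detection, assuming each monitoring run can export a snapshot of every agent's configuration as a dictionary. The snapshot shape and agent names are hypothetical. Testing agents for adversarial attacks, data poisoning, or model inversion requires dedicated evaluation and is not attempted here.

```python
import hashlib
import json

def fingerprint(config: dict) -> str:
    """Stable SHA-256 of an agent's configuration; keys are sorted so ordering does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def diff_posture(baseline: dict, current: dict) -> dict:
    """Compare two snapshots of {agent_name: config} taken at different times."""
    report = {
        "new_agents": sorted(current.keys() - baseline.keys()),
        "removed_agents": sorted(baseline.keys() - current.keys()),
        "changed": {},
    }
    for name in sorted(baseline.keys() & current.keys()):
        old, new = baseline[name], current[name]
        if fingerprint(old) == fingerprint(new):
            continue  # unchanged since the last snapshot
        # Record which top-level settings drifted.
        report["changed"][name] = sorted(
            k for k in old.keys() | new.keys() if old.get(k) != new.get(k)
        )
    return report

# Hypothetical snapshots from two consecutive monitoring runs.
baseline = {
    "hr-helper": {"connectors": ["hr-system"], "shared_with": ["hr-team"]},
    "it-bot": {"connectors": ["itsm"]},
}
current = {
    "hr-helper": {"connectors": ["hr-system", "email-send"], "shared_with": ["hr-team"]},
    "sales-agent": {"connectors": ["crm"]},
}
report = diff_posture(baseline, current)
print(report)
```

In practice a scheduler would run this on every collection interval and raise an alert whenever `new_agents`, `removed_agents`, or `changed` is non-empty. Here it reports the new `sales-agent`, the removed `it-bot`, and the added connector on `hr-helper`.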

3. Risk Identification and Analysis

Proactively identifying and analyzing risks is crucial for maintaining a robust AI security posture. Key activities include threat modeling Agents as we do humans, and identifying threats specific to AI Agents by detecting weaknesses or exposure in any of their individual components. An AI Bill of Materials (AIBOM) can support this by pinpointing individual risky components, so that fixes can be set in motion to secure the specific apps, flows, triggers, or connections that affect how the AI Agent operates.
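To make the AIBOM idea more concrete, here is a small sketch that treats the bill of materials as a flat list of components per agent, each carrying the issues found on it. The `BomEntry` fields and the severity weights are illustrative assumptions, not a published AIBOM format. It produces two views: a rolled-up risk score per agent, and a queue of individual components to fix, worst first.

```python
from collections import defaultdict
from dataclasses import dataclass

# Assumed severity weights; a real program would calibrate these.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 7, "critical": 10}

@dataclass(frozen=True)
class BomEntry:
    agent: str
    component: str
    kind: str            # "app", "flow", "trigger", or "connection"
    issues: tuple = ()   # (issue, severity) pairs found on this component

def risk_by_agent(bom: list) -> dict:
    """Sum weighted issue severities for each agent."""
    scores = defaultdict(int)
    for entry in bom:
        scores[entry.agent] += sum(SEVERITY_WEIGHT[sev] for _, sev in entry.issues)
    return dict(scores)

def remediation_queue(bom: list) -> list:
    """Risky components ordered by their worst issue, then by agent and component name."""
    risky = [
        (max(SEVERITY_WEIGHT[sev] for _, sev in e.issues), e.agent, e.component, e.kind)
        for e in bom if e.issues
    ]
    return sorted(risky, key=lambda r: (-r[0], r[1], r[2]))

# Hypothetical AIBOM entries for two agents.
bom = [
    BomEntry("hr-helper", "payroll-connection", "connection", (("hard_coded_secret", "critical"),)),
    BomEntry("hr-helper", "email-trigger", "trigger", (("untrusted_input", "high"),)),
    BomEntry("hr-helper", "faq-flow", "flow"),
    BomEntry("it-bot", "reset-password-flow", "flow",
             (("over_shared", "medium"), ("no_approval_step", "high"))),
]
print(risk_by_agent(bom))
for item in remediation_queue(bom):
    print(item)
```

Ranking components rather than whole agents reflects the point that a fix usually lands on one connection, trigger, or flow rather than on the agent as a whole.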

4. Proactive Mitigation Strategies

AISPM involves implementing proactive strategies to mitigate identified risks. This includes applying tailored measures to AI Agents, such as robust authentication and access controls, and revoking any hard-coded secrets or embedded identities and removing them from plaintext. It also means having protocols in place to respond effectively (and quickly) to security incidents, and ensuring that security and governance teams can keep agents up to date with patches and improvements so that agents remain compliant and data loss risk is managed to the best of the organization's abilities.
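The mitigation step can be sketched as a playbook that maps each finding type to ordered remediation steps. The finding names, steps, and priorities below are hypothetical. One design choice worth noting is that a hard-coded secret is revoked or rotated before its plaintext copy is removed, because the value may already have been copied elsewhere. Anything without a playbook entry falls back to manual triage, which is where incident response protocols take over.

```python
# Hypothetical playbook: finding type -> ordered remediation steps.
PLAYBOOK = {
    "hard_coded_secret": [
        "revoke or rotate the exposed credential",
        "store the replacement credential in a secrets manager",
        "remove the plaintext value from the agent",
    ],
    "embedded_builder_identity": ["switch the connection to end-user or service-account auth"],
    "sensitive_data_access": ["remove the data source or gate it behind approval"],
    "over_shared": ["restrict sharing to named groups"],
}
# Lower number = handled first; unknown finding types sort last.
PRIORITY = {"hard_coded_secret": 0, "embedded_builder_identity": 1,
            "sensitive_data_access": 2, "over_shared": 3}
FALLBACK = ["escalate to the security team for triage"]

def plan_remediation(findings):
    """findings: iterable of (component, finding_type). Returns (component, step) pairs in order."""
    ordered = sorted(findings, key=lambda f: (PRIORITY.get(f[1], len(PRIORITY)), f[0]))
    return [(component, step)
            for component, kind in ordered
            for step in PLAYBOOK.get(kind, FALLBACK)]

example_findings = [
    ("faq-docs", "over_shared"),
    ("payroll-api", "hard_coded_secret"),
    ("payroll-api", "prompt_injection_alert"),  # no playbook entry, so it is escalated
]
plan = plan_remediation(example_findings)
for component, step in plan:
    print(f"{component}: {step}")
```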

5. Governance and Policy Management

Effective governance and policy management are essential for maintaining the security and ethical integrity of AI systems. Part of AISPM is enabling the fair, transparent, and accountable use of AI Agents, which makes it possible for the organization to roll out AI and capitalize on its full potential. To do so, any AISPM solution or program should aim to establish and enforce policies that uphold corporate boundaries and structure, with involvement from stakeholders across IT, security, data, and business units.
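Policy enforcement of this kind is often implemented as policy-as-code. The sketch below expresses a few hypothetical policies as named predicates over agent metadata, each tagged with the stakeholder group that owns it, so a violation can be routed to the group responsible (IT, security, data, or a business unit). The metadata keys and the policies themselves are assumptions for illustration only.

```python
from typing import Callable, NamedTuple

class Policy(NamedTuple):
    name: str
    owner_team: str                 # stakeholder group accountable for the policy
    check: Callable[[dict], bool]   # returns True when the agent complies

# Hypothetical policies over illustrative metadata keys.
POLICIES = [
    Policy("agent-has-accountable-owner", "IT",
           lambda a: bool(a.get("owner"))),
    Policy("connectors-are-approved", "Security",
           lambda a: set(a.get("connectors", [])) <= set(a.get("approved_connectors", []))),
    Policy("internal-data-not-shared-externally", "Data",
           lambda a: not (a.get("data_classification") == "internal"
                          and a.get("shared_externally", False))),
]

def evaluate(agent: dict, policies=POLICIES) -> list:
    """Return (policy, owning team) for every policy the agent violates."""
    return [(p.name, p.owner_team) for p in policies if not p.check(agent)]

sales_agent = {
    "owner": "",
    "connectors": ["crm", "unvetted-webhook"],
    "approved_connectors": ["crm"],
    "data_classification": "internal",
    "shared_externally": True,
}
compliant_agent = {"owner": "jane.doe", "connectors": ["crm"], "approved_connectors": ["crm"]}

violations = evaluate(sales_agent)
print(violations)
print(evaluate(compliant_agent))
```

Keeping each policy as a small, named, owned check lets different stakeholder groups contribute rules without editing one monolithic policy.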

Why is AISPM Important?

AISPM is critical for several reasons, which can be summarized as its ability to help security teams understand how and why Agents do what they do in the enterprise. More specifically, AISPM addresses:

- Unique Vulnerabilities of AI Systems: AI systems introduce new attack surfaces that traditional security measures may not adequately protect

- Compliance and Regulatory Requirements: With increasing regulations around data privacy and AI ethics, organizations need to ensure that their AI systems comply with legal standards

- Protecting Organizational Reputation and Assets: Effective AISPM helps protect an organization’s reputation and assets by mitigating the risks associated with AI systems

- Enhancing Trust in AI: By ensuring the security and ethical integrity of AI systems, AISPM enhances trust in AI technologies among stakeholders and users

AISPM provides a structured approach to managing the security posture of AI systems, addressing their unique vulnerabilities, and ensuring compliance with regulatory standards. By adopting AISPM, organizations can proactively safeguard their AI assets and build a foundation of trust and reliability as they adopt and embrace AI Agents.
