Zenity Recognized in Gartner AI TRiSM Market Guide

Innovation, specifically the evolution of technology, has always been about expanding what’s possible or simplifying today’s complexity, and sometimes both. We saw this with the internet revolution, the adoption of cloud computing, remote work, and low-code/no-code; now AI is fundamentally reshaping how teams operate. While these advancements bring opportunities for organizations and push people’s creativity to new limits, they also introduce new risks.

AI Agents are being adopted at an unprecedented pace, embedding themselves into daily workflows and decision-making processes. Interestingly, it’s not just early-stage or smaller companies driving this shift. Large enterprises, even those traditionally slow to adopt cutting-edge technology, are at the forefront. But as AI Agents become essential to operations, they also become high-value targets: they are deeply integrated into an organization’s ecosystem, connected to vast amounts of sensitive data, and capable of acting autonomously. Malicious actors now have access to an entirely new class of exploitable assets: AI Agents.

For years, organizations have built insider threat models to mitigate risks posed by human employees. Now, they must apply the same level of scrutiny to AI Agents. As Inbar recently highlighted, IBM warned in 1979, “A computer can never be held accountable, therefore a computer must never make a management decision.” Yet today, AI Agents are entrusted with decision-making, executing tasks without human oversight. This shift raises urgent security concerns not just about risk detection but also about governance, accountability, and control.

Large enterprises are already heavily consuming, implementing, and building AI agents at breakneck speed. Yet once again, proper security and governance processes and tooling are lacking. Many early efforts focus on the data layer of human interaction with AI agents (e.g., what goes into the agent and what comes back), but organizations are realizing that this is far from sufficient. They need a more structured approach, one that applies a defense-in-depth strategy and recognizes that even internal data cannot be fully trusted, since external threats can manipulate it anywhere within a vast enterprise ecosystem. To secure these intelligent systems effectively, organizations must understand the full business context of AI-driven sequences and actions.

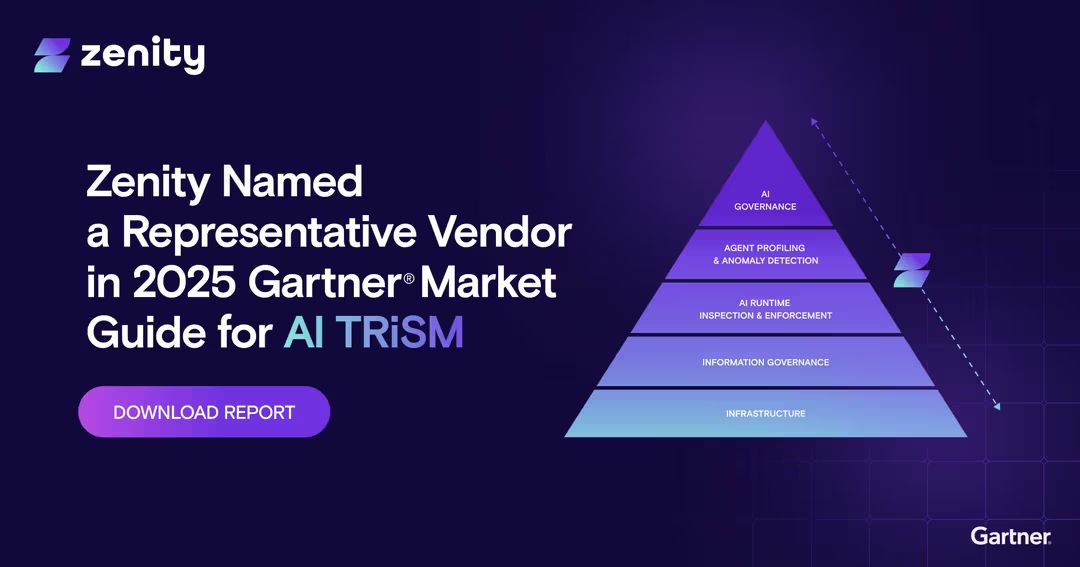

That’s why I’m proud to share that Zenity has been recognized in Gartner’s AI TRiSM Market Guide, a critical framework for defining how organizations should approach AI Trust, Risk, and Security Management (AI TRiSM).

Zenity: Pioneering AI Agent Security

AI agents can connect to any knowledge base, reason, and take actions. Much of this relies on low-code/no-code (LCNC) tooling, both to customize agentic platforms and to build new, purpose-built AI agents, and no company in the world is better positioned than Zenity to enable their safe adoption. We have been securing these tools and business ecosystems since our inception, enabling business innovation securely.

Not only were AI Agents being developed on LCNC platforms, but agentic platforms like Microsoft 365 Copilot and Salesforce Agentforce also quickly embedded themselves into daily workflows, actively making decisions, processing sensitive data, and in some cases autonomously executing workflows built and customized with low-code platforms.

Zenity’s Leadership in AI Security Innovation

By combining AI Security Posture Management (AISPM) with AI Detection & Response (AIDR), we continue to enable enterprises to:

- Gain full visibility and control over AI Agents, ensuring secure adoption by monitoring actions, data flows, and interactions.

- Detect and respond to AI-driven threats in real time, including prompt injection, agent jailbreaks, unauthorized access, intent breaking, and data exfiltration.

- Prevent vulnerabilities and security gaps by enforcing least privilege, securing authentication, and mitigating data leakage risks.

- Remediate and govern, enabling security teams to quarantine, block, or restrict risky AI Agent behavior without disrupting business operations.

AI is the Future, But Only if it’s Secured

AI has the potential to unlock unprecedented productivity, but it can just as easily introduce uncontrollable risk if left unchecked. As agentic AI takes organizations by storm, they can’t afford to overlook these risks. Gartner defines AI TRiSM as a framework that combines AI security, governance, and risk management to ensure responsible AI deployment. Within this framework, two core layers of AI security are emerging:

- AI Governance: Ensuring AI development and deployment align with compliance, ethical standards, and risk controls.

- AI Runtime Inspection & Enforcement: Monitoring and responding to AI threats in real time, addressing issues such as prompt injection, unauthorized data access, and AI-driven attack surfaces.


As organizations continue to embrace AI, whether by developing their own AI Agents or by using “off-the-shelf” agentic platforms and agents, Zenity remains committed to ensuring that AI Agents are secure and trustworthy from buildtime to runtime.

I encourage you to read the Gartner AI TRiSM Market Guide to learn how your organization can secure AI Agents at scale, before security gaps become enterprise-wide vulnerabilities.
