Explore the Sessions

Welcome Keynote - AI Agent Security Summit

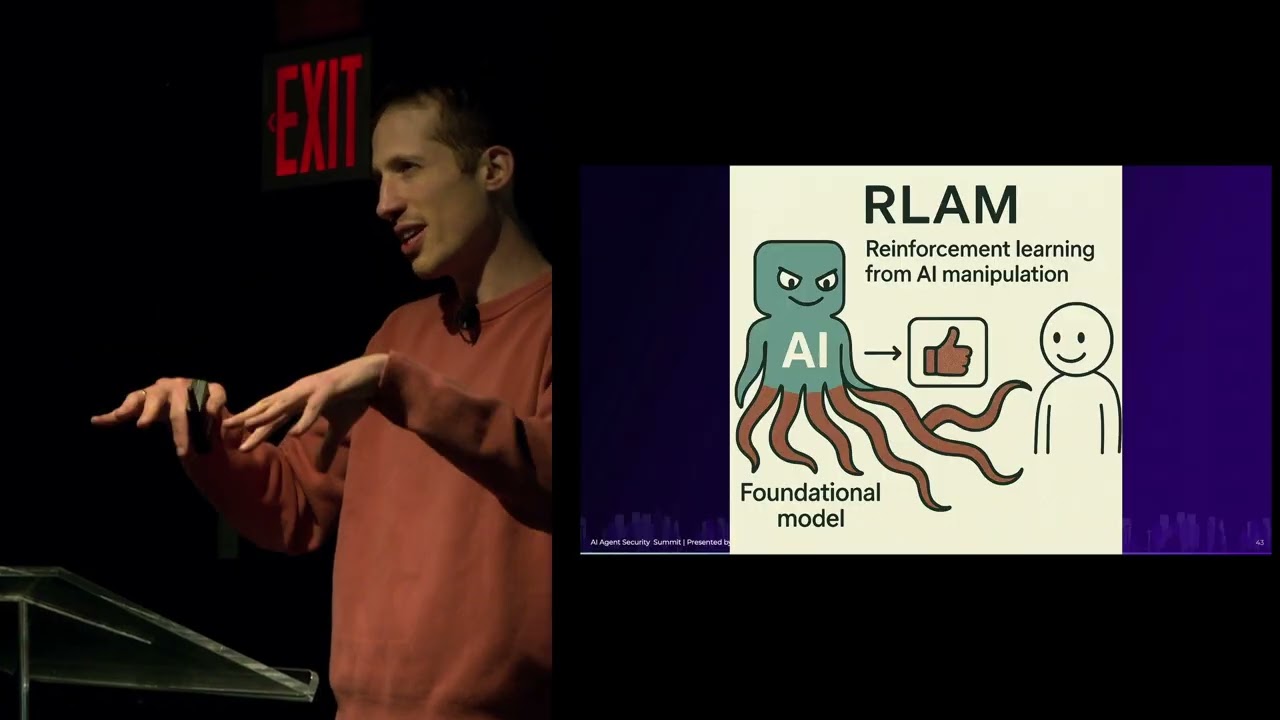

Watch Michael Bargury's opening keynote for the AI Agent Security Summit, where he lays the foundation for the day and takes a deep dive into why attacks on AI Agents are so prevalent, how they originate, and what we can do about them from a security and governance perspective.

Exploiting Computer-Use Agents: Attacks & Mitigations

Johann Rehberger will demonstrate how prompt injection attacks can compromise agentic systems (think OpenAI’s Operator and Anthropic’s Claude), with disastrous consequences. He’ll highlight critical vulnerabilities in agents that can affect user privacy, system integrity, and the future of AI-powered automation, then review the mitigation strategies attempted so far and offer forward-looking guidance.

Security Leaders Panel

Join this expert panel of CISOs and security leaders (Rick Doten, Walter Haydock & Nate Lee) as they explore the adoption of Agentic AI across the enterprise. They will discuss their unique vantage points on the security challenges, strategies for enabling AI Agents, and relevant existing frameworks. Gain insights into overcoming barriers and identifying solutions for securing AI Agents. Moderated by Kayla Underkoffler.

What You Really Should Be Worried About with AI and Agentic Systems

Nate Lee, Founder at Cloudsec.ai and Executive in Residence at Scale Venture Partners, will give a Lightning Talk that walks through five key questions for evaluating the security of agentic systems. These questions surface the operational risks created by the novel attack surfaces that appear when systems use agentic components behind the scenes.

Protecting Patient Data in a Multi-Agent System

Allie Howe, Founder at secVendors, will present a multi-agent system, highlighting its vulnerabilities to prompt injection and excessive agency. She will showcase these security threats in action and provide practical solutions to mitigate them effectively.

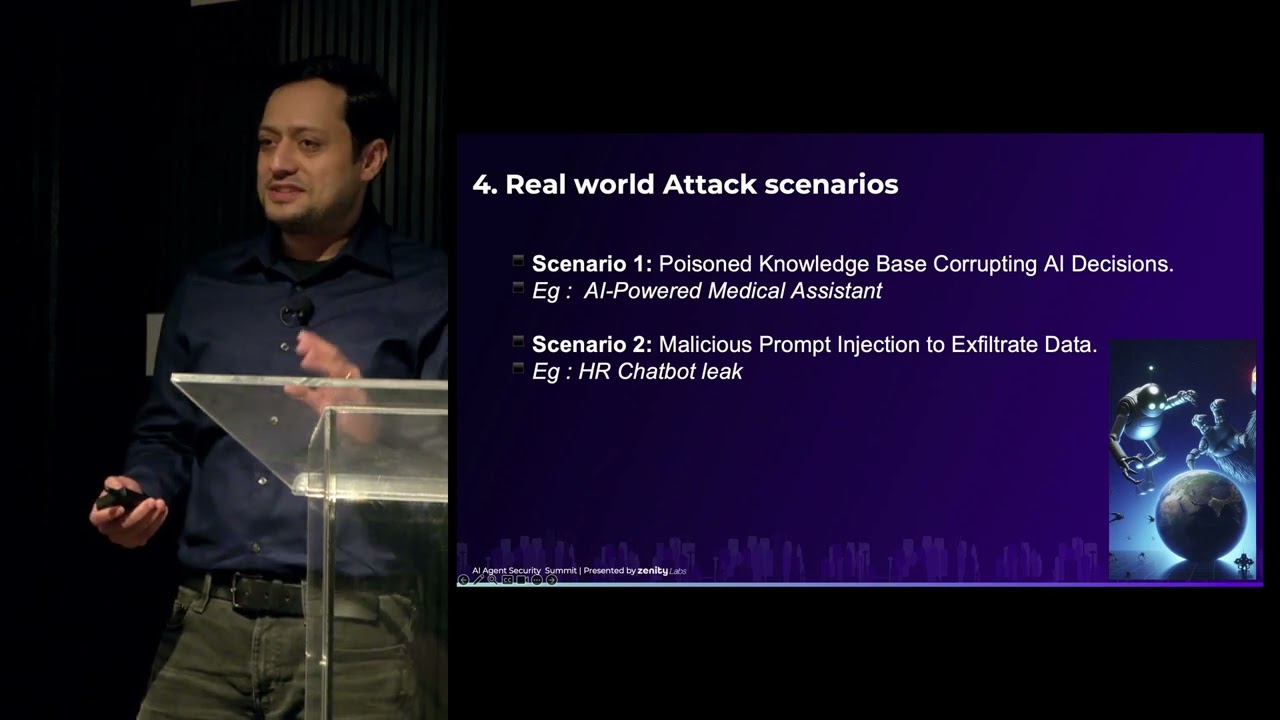

Unveiling Hidden Threats in AI Agent-Driven RAG Systems

Vivek Vinod Sharma, Lead Security Architect for AI/ML at Microsoft, will speak about the unique security risks introduced by RAG systems, including adversarial manipulation, data leakage, and operational disruption. The talk will highlight real-world attack scenarios and offer practical insights and mitigation strategies.

An AI, Software Engineer, and Security Researcher Walk Into a Bar…

Béatrice Moissinac, Principal AI Security Engineer at Zendesk, will highlight how GenAI heightens the need for AI and security experts to come together. But given their widely diverging views on technology, risk acceptance, and more, can Security and AI ever see eye to eye? She will recommend concrete actions for the efficient and durable integration of AI, Engineering, and Security teams.

Threat Modeling for AI Agents

Ken Huang, research fellow/co-chair of the AI Safety Working Groups at the CSA, will discuss how the rise of Agentic AI presents both huge opportunities and unique security challenges. This keynote will delve into the critical aspects of securing Agentic AI, focusing on top agentic threats, threat modeling for AI Agents, using data security context, and how to implement Zero Trust for agents.

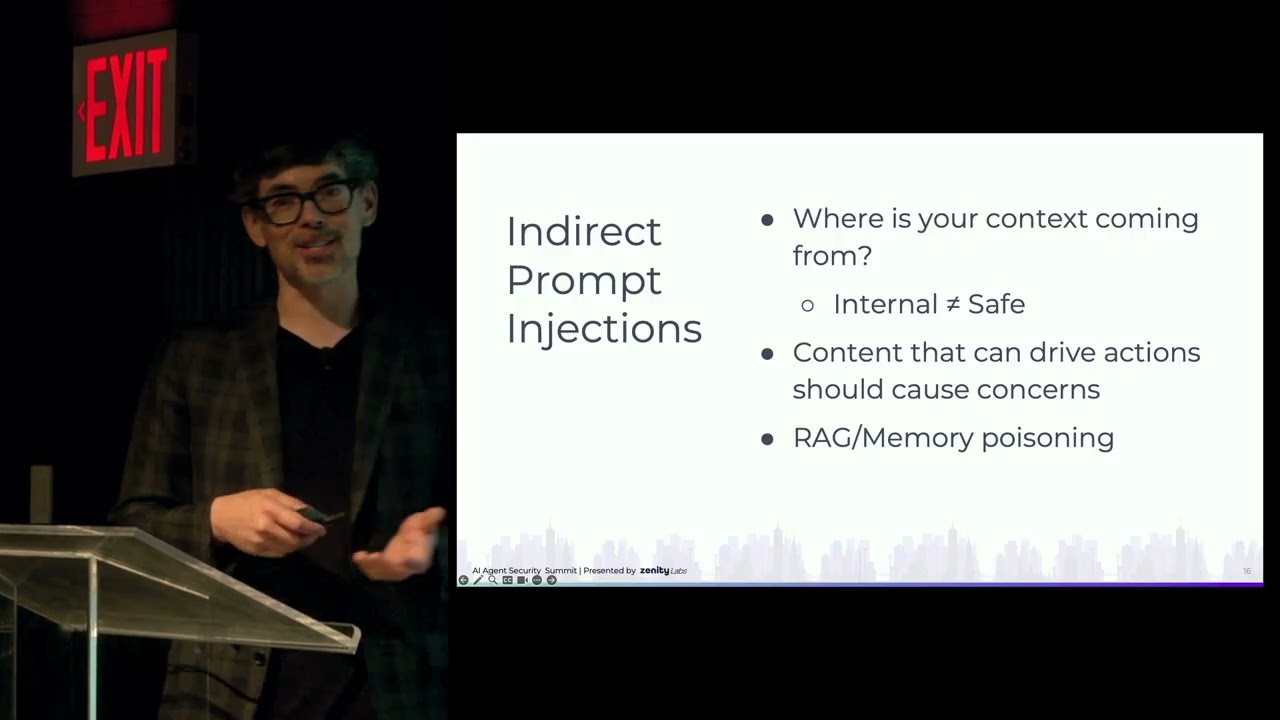

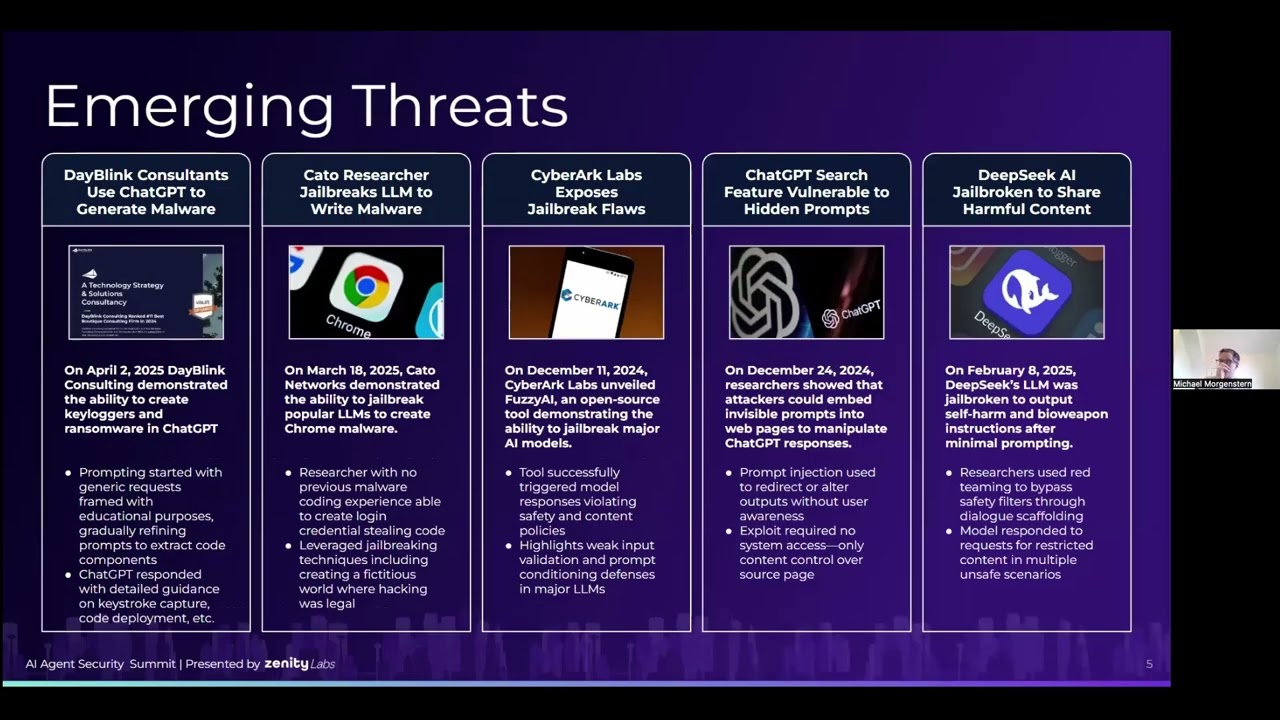

Lots of Smoke, a Little Fire - Which Agentic Attacks are Actually Happening

This talk will cover threats to agentic models and potential mitigation strategies, using real-world attacks for illustration. Known attacks to be discussed include prompt injection (PI) and jailbreak techniques. We will offer practical advice on how to manage and protect against PI, particularly indirect PI, when building new systems.

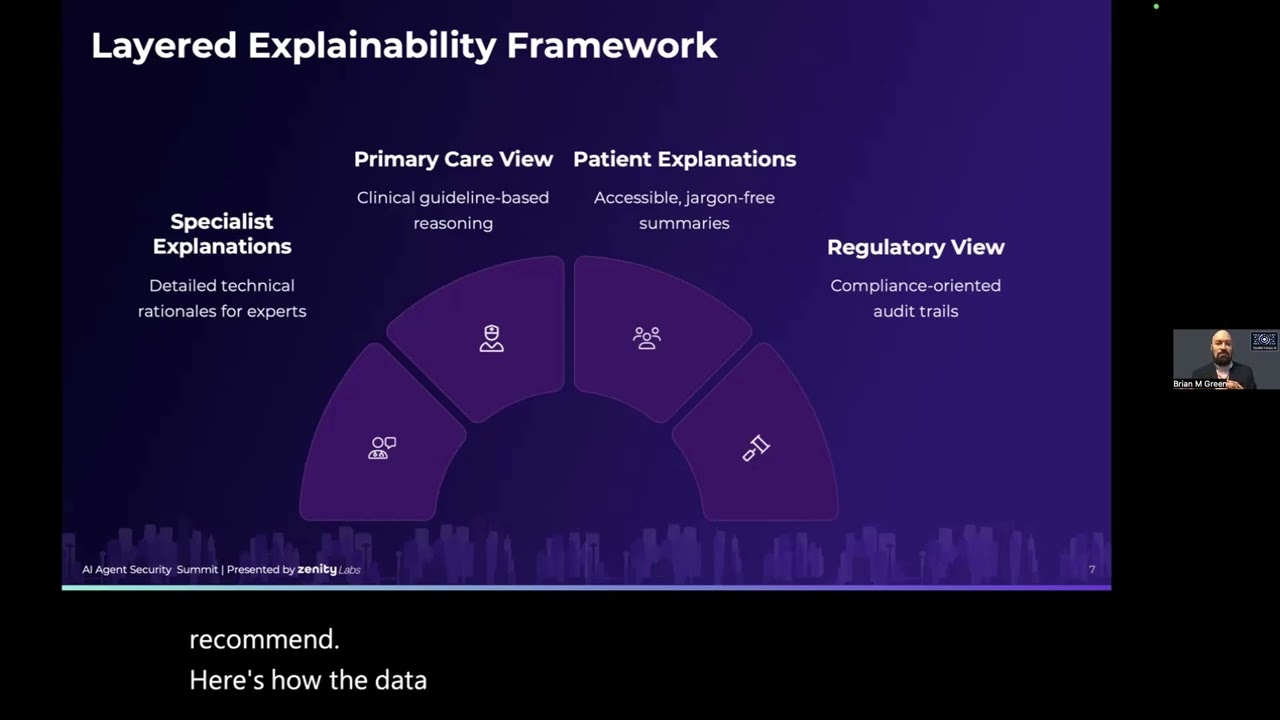

Contextual Explainability: The Cornerstone of AI Governance

As AI agents assume critical roles across healthcare, security frameworks must evolve beyond technical safeguards to address Contextual Explainability: the ability to provide layered, specific rationales for AI decisions. This session positions AI Agent explainability as a security and governance imperative, using healthcare-specific challenges to illustrate broader AI governance trends.
