What You Missed at the AI Agent Security Summit

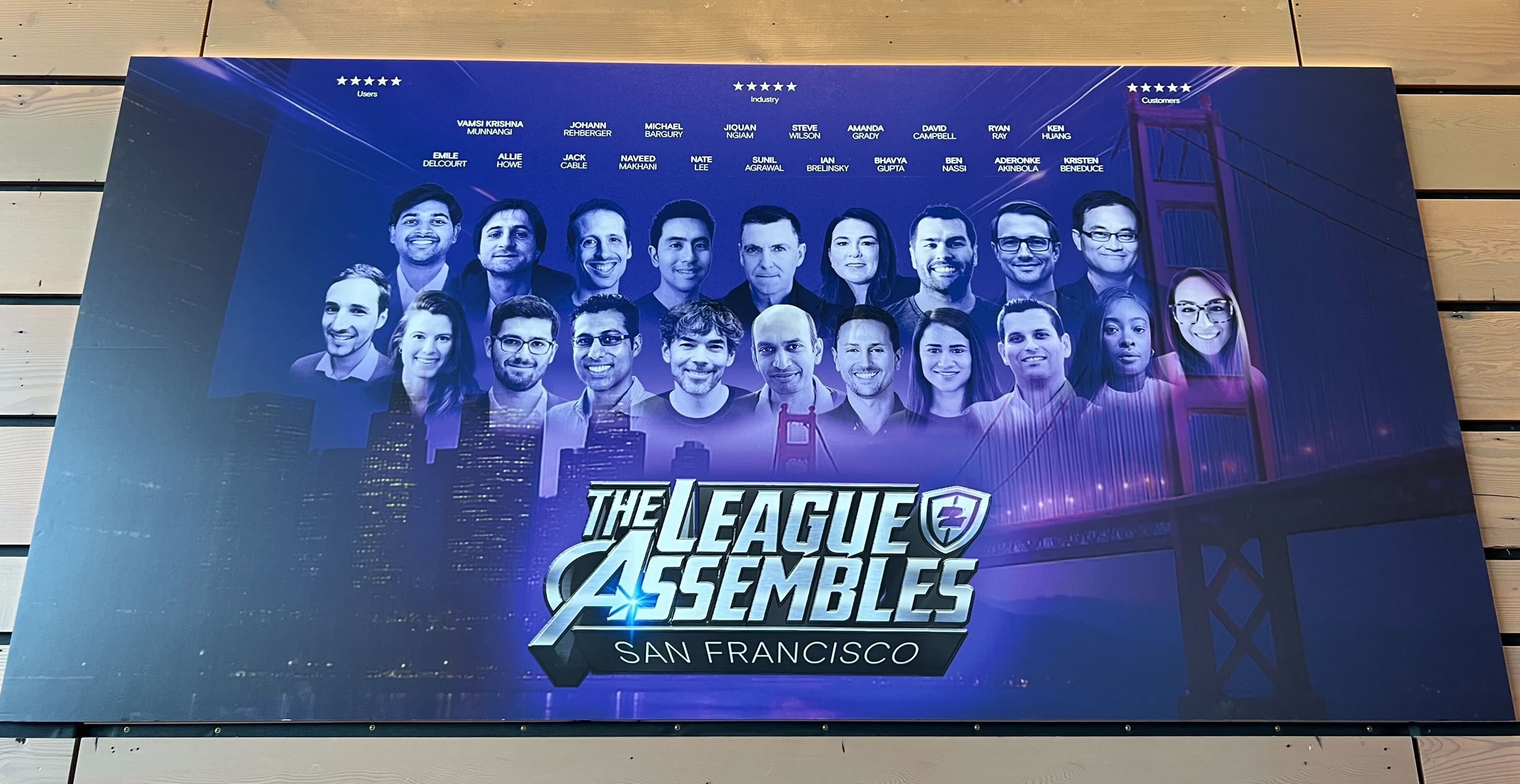

When the day wrapped up at the Commonwealth Club, one thing was clear: we are in a moment unlike anything the security community has faced before. Hundreds of practitioners and thought leaders from around the world came together, and the turnout alone showed just how urgent and relevant this topic has become.

Michael Bargury opened his keynote with a question that lingered well past the event: Are we actually making progress in securing agents? The answer depended on who you asked, but most landed somewhere between a cautious yes and a firm no.

Many presenters demonstrated how easy it still is to exploit these systems and how significant the fallout can be. But for the first time, I noticed a shift. The discussion was no longer about theory or speculation; it was about what is happening right now in production environments, what works, what fails, and how quickly the ground is shifting beneath us.

Consistencies in Practical Application

Across industries, leaders echoed many of the same ideas about what progress looks like in practice.

The first was the need for hard boundaries: clearly defining what each system can touch or execute while still keeping it useful to the business. It came up in different forms, including configuration templates and exclusions. However it is described, the principle is the same: limit scope, reduce the blast radius, and assume something will eventually go wrong.
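To make the idea concrete, here is a minimal sketch of a deny-by-default boundary around an agent's tools. Nothing here comes from a specific speaker or product: `AgentScope`, `ScopeError`, and `call_tool` are hypothetical names, and the example assumes tools are plain Python callables looked up by name.

```python
from dataclasses import dataclass, field


class ScopeError(PermissionError):
    """Raised when an agent requests a tool outside its declared scope."""


@dataclass(frozen=True)
class AgentScope:
    # Hypothetical per-agent boundary: the only tools this agent may call.
    agent_id: str
    allowed_tools: frozenset = field(default_factory=frozenset)

    def check(self, tool_name: str) -> None:
        # Deny by default: anything not explicitly listed is out of scope.
        if tool_name not in self.allowed_tools:
            raise ScopeError(f"{self.agent_id} may not call {tool_name!r}")


def call_tool(scope, tool_name, tools, **kwargs):
    """Run a tool from the `tools` registry only after the scope check passes."""
    scope.check(tool_name)
    return tools[tool_name](**kwargs)
```

An agent scoped to `frozenset({"search"})` can call `search` but gets a `ScopeError` on anything else, so a hijacked instruction cannot reach tools that were never granted.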

The second theme focused on keeping a human in the loop for any action that carries meaningful impact. These tools have grown more capable in a very short time, and that capability brings new types of risk. Several speakers reinforced that human oversight is not optional; it is how we maintain accountability as automation becomes more deeply embedded.

As Ryan Ray, Director of Cybersecurity at Slalom, put it, “We need to treat these agents like humans. Agents can’t be fired though.” It was a sharp reminder that while these systems act independently, accountability still rests squarely on us.
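One way to picture a human-in-the-loop gate is a small wrapper that lets routine actions through and pauses anything high impact until a person approves it. This is a sketch under assumptions, not a pattern attributed to any speaker: the `HIGH_IMPACT` set, `run_action`, and the `approver` callback are all hypothetical, and a real approval step would likely be a ticket or chat prompt rather than a function call.

```python
from typing import Callable

# Hypothetical set of actions treated as high impact in this sketch.
HIGH_IMPACT = {"send_payment", "delete_records", "change_permissions"}


def run_action(
    action: str,
    execute: Callable[[], str],
    approver: Callable[[str], bool],
) -> str:
    """Run low-impact actions directly; ask a human before high-impact ones."""
    if action in HIGH_IMPACT and not approver(action):
        # The human declined, so the action is never executed.
        return f"blocked: {action} was not approved"
    return execute()
```

The approver is passed in rather than hard-coded so the same gate can sit in front of different review channels.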

The third theme centered on ongoing visibility. Security reviews at build time are not enough. Teams need live context about what agents are doing, what data they are accessing, and where their decisions are being executed. Without continuous monitoring, issues only come to light after damage has already occurred.
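As a rough illustration of runtime visibility, the sketch below records every tool call an agent makes, whether it succeeds or fails. The names `audited`, `AUDIT_LOG`, and `lookup_customer` are made up for this example, and the in-memory list stands in for whatever log pipeline or monitoring system a team actually uses.

```python
import functools
import json
import time

AUDIT_LOG = []  # in-memory stand-in for a real log sink (assumption)


def audited(agent_id):
    """Decorator that records each call to a tool, including failed calls."""
    def decorator(tool):
        @functools.wraps(tool)
        def wrapper(*args, **kwargs):
            record = {
                "ts": time.time(),
                "agent": agent_id,
                "tool": tool.__name__,
                # Only keyword arguments are captured in this sketch.
                "kwargs": json.dumps(kwargs, default=str),
                "ok": False,
            }
            try:
                result = tool(*args, **kwargs)
                record["ok"] = True
                return result
            finally:
                # Append even when the tool raises, so failures stay visible.
                AUDIT_LOG.append(record)
        return wrapper
    return decorator


@audited("helpdesk-bot")
def lookup_customer(customer_id):
    # Hypothetical tool; a real one would query a data store.
    return {"customer_id": customer_id}
```

Because the record is written in a `finally` block, a tool that throws still leaves a trace, which is the kind of live context that build-time reviews cannot provide.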

Together, these takeaways point to a growing consensus. The question is no longer whether these systems need protection, but how to make that protection part of how they operate every day.

A New Level of Depth

What stood out this year was how deep the conversation went. Sessions moved far beyond surface-level concerns and focused on how teams are hardening and stress-testing these systems in practice.

One of the many standout sessions focused on lessons learned from red team exercises that intentionally pushed systems until they failed. The message was clear: traditional testing methods do not hold up in environments driven by autonomous, adaptive technologies. As Steve Wilson, Chief AI & Product Officer at Exabeam, said, “We’ve spent decades training users not to click the wrong link. Now we have to train systems not to follow the wrong instruction.” His point captured the essence of what makes this new attack surface different. The best preparation for failure is to simulate it early and often. By probing orchestration layers, memory recall, and tool integrations, these exercises revealed vulnerabilities long before they could be exploited.

That pragmatic tone carried through the rest of the event. From runtime policy enforcement and behavioral monitoring to discussions on detection and response, the focus was not on theory but on what is already being done to build resilience into production systems.

The Takeaway

The good news is that awareness has caught up. Security teams are no longer debating if this is a problem; they are already running experiments, developing policies, and learning from one another. That alone marks real progress.

Still, the complexity is growing faster than the defenses. Systems are becoming more interconnected, more autonomous, and more capable of chaining actions across platforms. With new protocols like the Model Context Protocol (MCP) expanding the attack surface, challenges such as tool misuse, data exposure, and poisoned workflows are becoming increasingly common.

So, are we making progress? In the best case, maybe. In the worst case, not yet.

What gave me hope was the community itself. The event brought together researchers, engineers, and defenders who share the same goal: figuring out how to secure this new class of systems responsibly. The openness and collaboration in that room made one thing clear. This is just the start of the conversation.

If the energy from this week is any sign, the next summit will bring even more progress and more stories to share. As our CTO and co-founder, Michael Bargury, reflected, “The most valuable thing we built this week wasn’t a model or a tool - it was a community.” A fitting conclusion to a week that was as much about people as it was about technology.

Be sure to check out the Zenity Labs page, or sign up for early access to the on-demand content.
