Validating the Mission: Zenity Labs Research Cited in Gartner’s AI Platform Analysis

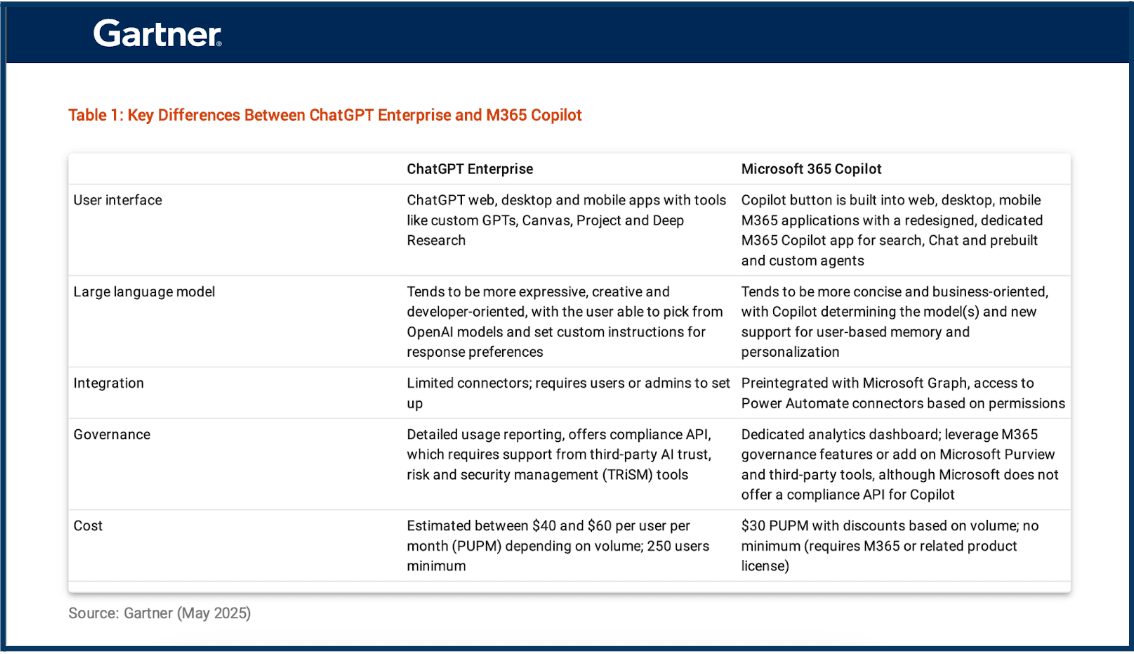

Research is what turns cybersecurity from a reactive scramble into a proactive discipline. It’s how security teams uncover new threats, pressure-test defenses, and understand the unintended consequences of innovation (especially as AI Agents reshape the attack surface).At Zenity, research isn’t a side effort. It’s how we build, challenge, and ultimately secure what’s next. In a recent Gartner report comparing ChatGPT Enterprise and Microsoft 365 Copilot, the analyst team cited original research from Zenity Labs' 'Stealing Copilot’s System Prompt,' which dives into how Microsoft 365 Copilot operates and where hidden risks lie.

Why This Matters

We’re proud of the recognition, but prouder of what it reflects.

As someone outside of the research team, I can’t take credit for the work. But I can say with confidence that this recognition reinforces something fundamental to who we are. At Zenity, research is how we contribute to the security and AI communities we serve. Zenity Labs exists to ask the hard questions - the ones others aren’t asking yet, uncover meaningful risk and threats to a new attack surface, and publish what others might hesitate to. Our research is guided by curiosity, responsibility, and a deep understanding of how AI is actually being used inside enterprises, not just how it’s intended to work.

So when a Gartner analyst team turned to our work to help explain the behavioral differences between M365 Copilot and ChatGPT Enterprise, it wasn’t just an industry nod. It reflected a shared commitment to real-world clarity in a fast-moving, high-stakes space.

What We Discovered (that Gartner Referenced)

The Stealing Copilot’s System Prompt research helped support a key insight from Gartner’s client feedback and internal testing, “Microsoft 365 Copilot responses are often briefer and offer less flexibility than ChatGPT Enterprise". The Zenity Labs post helped highlight why that might be happening. By exposing Copilot’s system prompt (a set of embedded instructions that silently govern how the it behaves) we uncovered that:

- Copilot relies on predefined orchestration logic that users can’t view or modify

- These embedded rules shape how requests are interpreted and routed

- There’s no way for end-users to pick the model or adjust response behavior

While Gartner’s conclusion came from direct client input and evaluation, Zenity Labs provided a rare look under the hood to help reinforce the idea that M365 Copilot’s behavior is tightly controlled by design, in ways that differ from ChatGPT Enterprise.

The Bigger Picture: Visibility is Power

Security teams evaluating M365 Copilot and ChatGPT Enterprise aren’t just choosing between vendors. They’re choosing between levels of control, transparency, and risk.

Zenity supports both platforms. We help customers adopt and scale these agents with real-time governance, observability, and risk mitigation from development to runtime. That means surfacing hidden system prompts. Enforcing usage policies. Flagging data exfiltration attempts. And giving security teams visibility into what these agents are doing with enterprise data.

For Microsoft 365 Copilot, we provide visibility into how prompts are interpreted, how sensitive data is used, and how orchestration logic drives behavior behind the scenes. For ChatGPT Enterprise, we help organizations manage custom GPTs, enforce usage policies, and monitor for prompt injection, data leakage, and other emerging threats.

In both cases, Zenity’s agent-centric approach gives security teams:

- Real-time insight into what AI Agents are doing and why

- Lifecycle coverage, from buildtime governance to runtime defense

- The ability to enforce policy, detect risk, and scale AI securely

We don’t just make AI Agent adoption safer, we make it observable, governable, and accountable across platforms.

If You Are Making These Decisions, Let’s Talk

Zenity Labs’ research being cited by Gartner is a moment worth celebrating — not just because it validates the work, but because it reinforces the reason we do it: to help teams make smarter, safer decisions about how they use AI.

We’ve seen firsthand how fast organizations are moving to adopt Copilot and ChatGPT Enterprise. We’ve also seen how much of that adoption happens in the dark without visibility, governance, or a plan for managing risk. If you're evaluating AI Agents for your organization, or if you’ve already deployed them, here’s where to start:

- Read the Gartner report for a platform-level comparison:

- Read our research to understand the system prompt risks that Gartner cited

- Talk to us if you want to see how Zenity secures Copilot and ChatGPT Enterprise in real-time

- Visit us at Gartner Security & Risk Management next week!

We’ll keep doing the research.

You focus on building securely.

All ArticlesRelated blog posts

The OWASP Top 10 for Agentic Applications: A Milestone for the Future of AI Security

The OWASP GenAI Security Project has officially released its Top 10 for Agentic Applications, the first industry-standard...

Inside the Agent Stack: Securing Agents in Amazon Bedrock AgentCore

In the first installment of our Inside the Agent Stack series, we examined the design and security posture of agents...

Inside the Agent Stack: Securing Azure AI Foundry-Built Agents

This blog kicks off our new series, Inside the Agent Stack, where we take you behind the scenes of today’s most...

Secure Your Agents

We’d love to chat with you about how your team can secure and govern AI Agents everywhere.

Get a Demo