Demystifying AI Agent Security

Let me be the first to say it, this space - AI agent security and governance - can be confusing. When I joined Zenity, I was new to “agents”; so new that I thought we were talking about endpoint agents (to be fair, my background is in XDR/SIEM). Laughs aside, if you’re still figuring it out, you’re in good company.

This post is meant to be your starting point - the breakdown that helps you get to that “aha” moment - because what’s different isn’t that cybersecurity needs to be reinvented. It’s that with AI agents, both what you’re defending and how you defend it are variables. That’s why finding the right solution feels harder, and why even orienting your strategy can seem daunting.

So let’s start with why this is familiar territory, and then get into the nuance that matters.

Why AI agent security isn’t different - just more nuanced

Practically speaking, AI agents are a new attack surface - and proven security strategies still apply. Before compromise, you assess, harden, and configure (think of risk assessment, security posture management, vulnerability management). During compromise, you detect, contain, and respond (think of detection, inline prevention, incident response). That part hasn’t changed. What has changed is the context (and the autonomy) - agents reason, retrieve knowledge, and take actions - so your controls must evolve to see and shape those steps.

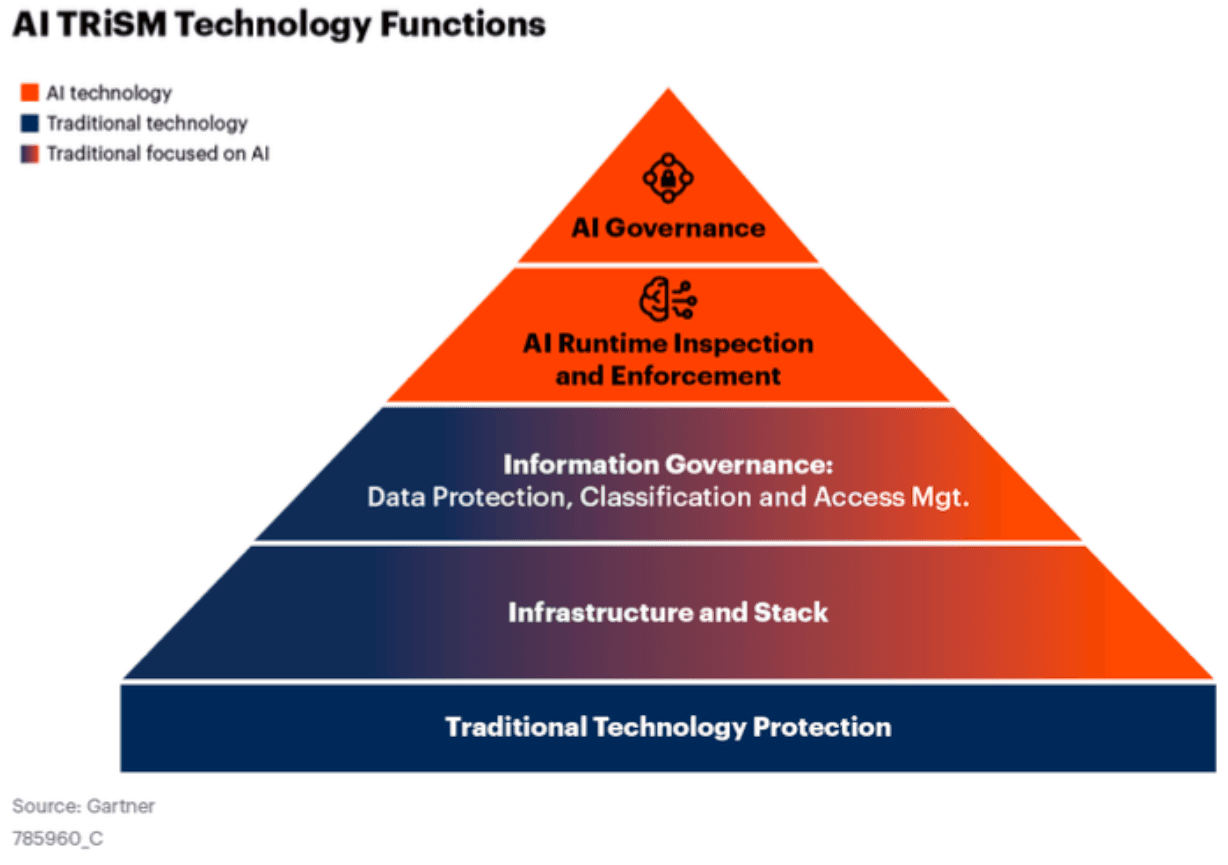

That’s the strategy; now let’s talk about the security tech stack. Gartner’s AI TRiSM (AI Trust, Risk, and Security Management) is a helpful way to map what you already own and where AI-specific controls belong. Gartner defines AI TRiSM as a framework with key layers of technical capabilities that enforce enterprise AI policies for governance, trustworthiness, security, and compliance. In the pyramid, your foundational controls (identity, endpoint, network, cloud) remain in place, with information-governance and infrastructure/stack capabilities layered above. At the top sit the AI-specific control planes, AI Governance and AI Runtime Inspection & Enforcement. To make the correlation clear (and yes, I’m oversimplifying - stay with me here) think of AI Governance as the “before compromise” solution (e.g. policy enforcement, risk management, etc.) and AI Runtime Inspection & Enforcement as the “during compromise” solution (e.g. detecting violations, anomalous behavior, etc.).

AI Agents can follow the traditional security strategy you trust, but they do require purpose-built controls. Keep the foundations that protect your environment and data, then layer AI-specific governance and runtime enforcement to close the blind spots and gaps.

Anatomy of an agent and where risks enter

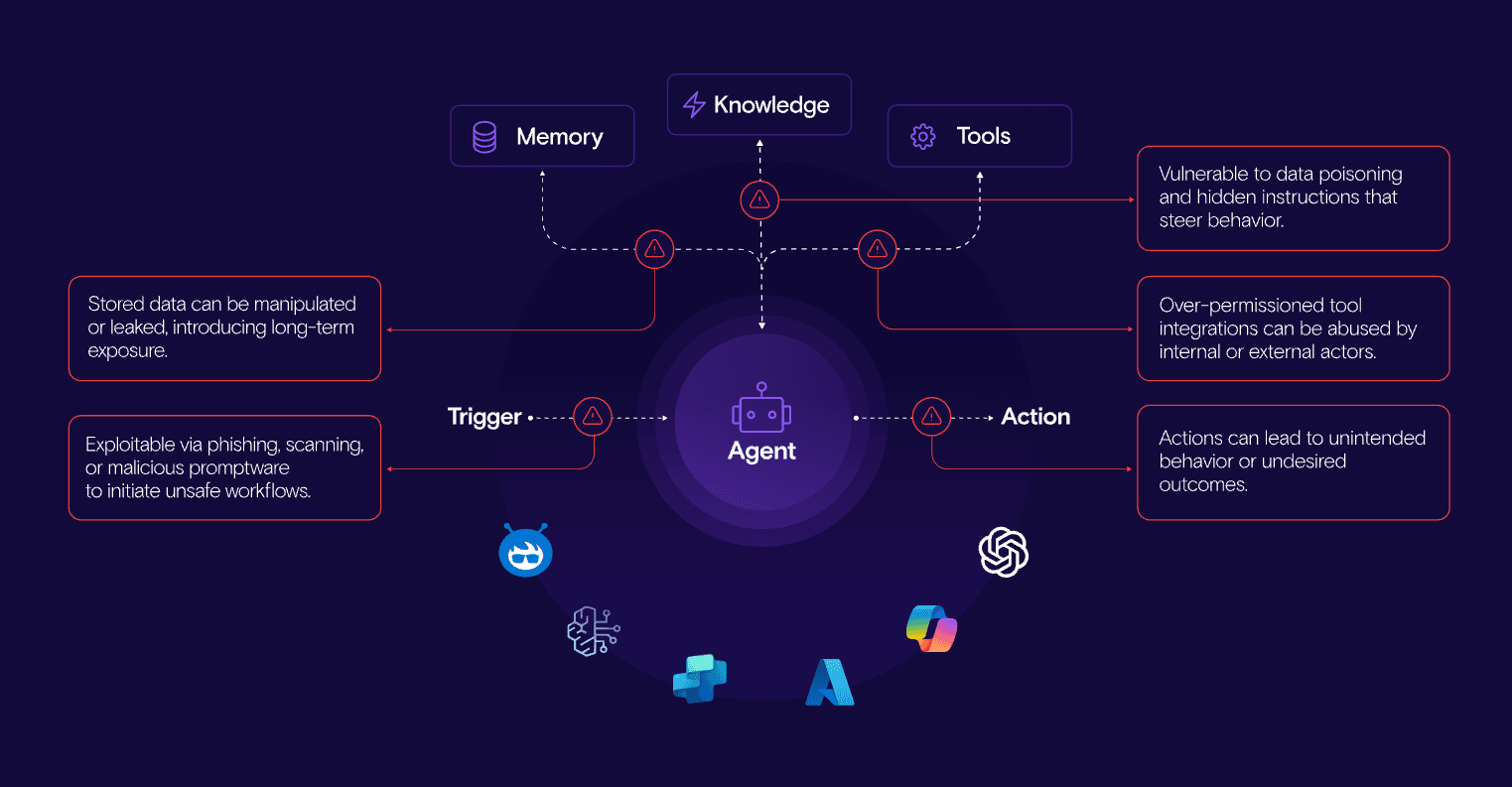

Why purpose-built controls? Because of how AI agents are built and operate. Here’s a quick 101. An AI agent makes decisions, touches sensitive systems, and invokes tools that change real data (autonomously or semi-autonomously). Under the hood, every agent has memory (state that persists), knowledge (what it can retrieve - docs, databases, RAG), and tools (the integrations or plugins it can call).

A trigger is the event that initiates and routes work to the agent. It can be a user prompt, another agent’s message, a webhook, or a schedule. The trigger kicks off a goal-oriented workflow - the agent reasons, optionally retrieves knowledge, may invoke tools, and produces an action. An action can be read-only (a response or summary) or write operations (send an email, edit a file, update a record).

You’ll see agents in three deployment shapes. SaaS-managed agents are vendor-owned assistants or agents you build and configure in the vendor’s SaaS platform (e.g., M365 Copilot, Copilot Studio, Salesforce Agentforce). Home-grown agents are built and owned by your teams using services like AWS Bedrock, Vertex AI, or Azure AI Foundry, giving you full control over orchestration, tool permissions, evaluation, and guardrails - maximum flexibility and responsibility. Device-based agents are local assistants such as GitHub Copilot, Cursor, or Claude desktop.

Here is where the risk comes in. Memory creates long-lived exposure, once sensitive details or poisoned instructions land there, they persist. Knowledge sources can be tampered with or seeded with hidden directives that steer behavior. Tools are often over-permissioned; a mis-scoped connector lets a well-meaning agent make wholesale changes it shouldn’t. Triggers can be abused - phishing or “promptware” that silently kicks off unsafe workflows. And actions, if unsupervised, can scale mistakes quickly (bulk edits, destructive automations, or quiet policy violations that look like normal work). These entry points can lead to AI-specific attacks like direct and indirect prompt injection, RAG poisoning, privilege escalation, etc.

Ultimately, it’s the same categories of bad actors, plus attackers who game the agents. This is why traditional tools don’t provide you with full coverage - they don’t capture what triggered the agent, what it retrieved, which tools it called, and the action it took. Purpose-built controls restore that visibility and give you enforcement at each step.

Approaches to securing AI agents

Here’s where many teams get confused - vendors secure and govern AI agents in different ways. Your goal is simple - choose a vendor (or vendors) that delivers AI Governance and AI Runtime Inspection & Enforcement (per Gartner) in a way that will hold up as the space evolves. To get there, it helps to recognize the three common approaches you’ll see (model-based security, I/O or browser-based, and agent-centric) so you can evaluate vendors with confidence and pick the right partners for your organization.

Model-based security

Start at the core: the large language model. The aim is to make the model behave safely so it’s harder to jailbreak, less likely to produce unsafe content, and more resilient to prompt manipulation.

Where it runs out of runway is everything that happens beyond the model runtime. Model controls don’t observe enterprise permissions, tool scopes, or downstream actions. They also tend to be tied to a provider/runtime, which can limit portability as your architecture evolves. When you assess this layer, look for repeatable safety tests and evidence that policies survive model or provider changes.

I/O or browser-based security

This lens watches the edges, what goes into and out of the agent (think prompt filtering and blacklisting). These controls deliver quick wins; they reduce accidental data exposure and catch obvious unsafe patterns during build and test.

The tradeoff is visibility. Edge filters are mostly reactive and blind to the decision chain and to what happens once tools start running. At scale they can fragment across channels and teams. Strong options here give you consistent, reusable policies and detailed logs so filtered events are easy to review.

Agent-centric security

This approach focuses on the “entity” that is the AI agent, specifically securing and governing how the agent behaves, what toolset invokes, how it communicates across systems, etc. across its lifecycle. At build time, check posture before anything reaches production. At runtime, understand the full activity path to detect behavior anomalies or malicious activity.

Agent-centric controls provide the plane traditional tools miss - the reasoning path and the action path together. They align with enterprise principles (least privilege, segmentation, continuous monitoring, incident response) and are designed to adapt as models, connectors, and agent behaviors change.

Choose what will age well

Now that I’ve shared the “aha” moments that helped me demystify this space, I hope you’ve got a solid starting point for how to think about all of this. One last thing I’ll leave you with - in a space that moves this fast, keep a simple checklist that blends what we covered with what your organization needs.

- Strategy alignment: Does the solution deliver AI Governance and AI Runtime Inspection & Enforcement?

- Coverage: Does the solution span both buildtime and runtime across SaaS-managed, home-grown, and device-based agents and assistants?

- Adaptability: Will it evolve with new models, connectors, and behaviors?

- Security stack: Can it integrate with existing enterprise controls like a SIEM?

- Visibility: Does it offer activity path visibility and audit-ready logs?

You don’t need to relearn security. You need visibility and control where traditional tools can’t reach - what the agent knows, what it can touch, and what it actually does. Evaluate through that lens and choose the partner that can deliver AI Governance and Runtime Inspection & Enforcement today (and keep pace as the landscape evolves).

All ArticlesRelated blog posts

GreyNoise Findings: What This Means for AI Security

GreyNoise Findings: What This Means for AI Security Late last week, GreyNoise published one of the clearest signals...

The CISO Checklist for the New AI Agent Reality

AI agents are now acting across SaaS, cloud, and endpoint environments with identities and permissions that traditional...

Zenity Joins the Microsoft Security Store: Securing AI Agents Everywhere, Together

We’re thrilled to share that Zenity is included in the unveiling of the Microsoft Security Store Partner Ecosystem....

Secure Your Agents

We’d love to chat with you about how your team can secure and govern AI Agents everywhere.

Get a Demo