Securing the future of AI Agents: Reflections from the Microsoft Build Stage

Standing on stage at Microsoft Build, surrounded by innovators shaping the future in the era of AI Agents, I felt equal parts inspired and responsible. Inspired by the rapid momentum around AI, and responsible for raising a flag about something we don’t talk about enough - how we secure the very systems that are now acting on our behalf.

This post isn’t a recap. It’s a continuation: a chance to go deeper into the story I shared (and the one we’re still writing).

AI Agents Are Changing Everything - Including the Way We Think About Security

AI Agents aren’t just another wave of automation. They’re a paradigm shift in how work gets done. They can reason, take action, and operate with unprecedented autonomy. That power is what makes them so valuable…and so risky.

Across industries, we’re seeing AI Agents accelerate decision-making, streamline processes, reduce operational overhead, and give teams a competitive edge. They’re already helping organizations do things like:

- Summarize complex documents and analyze data sets

- Automatically trigger business workflows

- Interact with customers across channels

- And much more - all with minimal human intervention

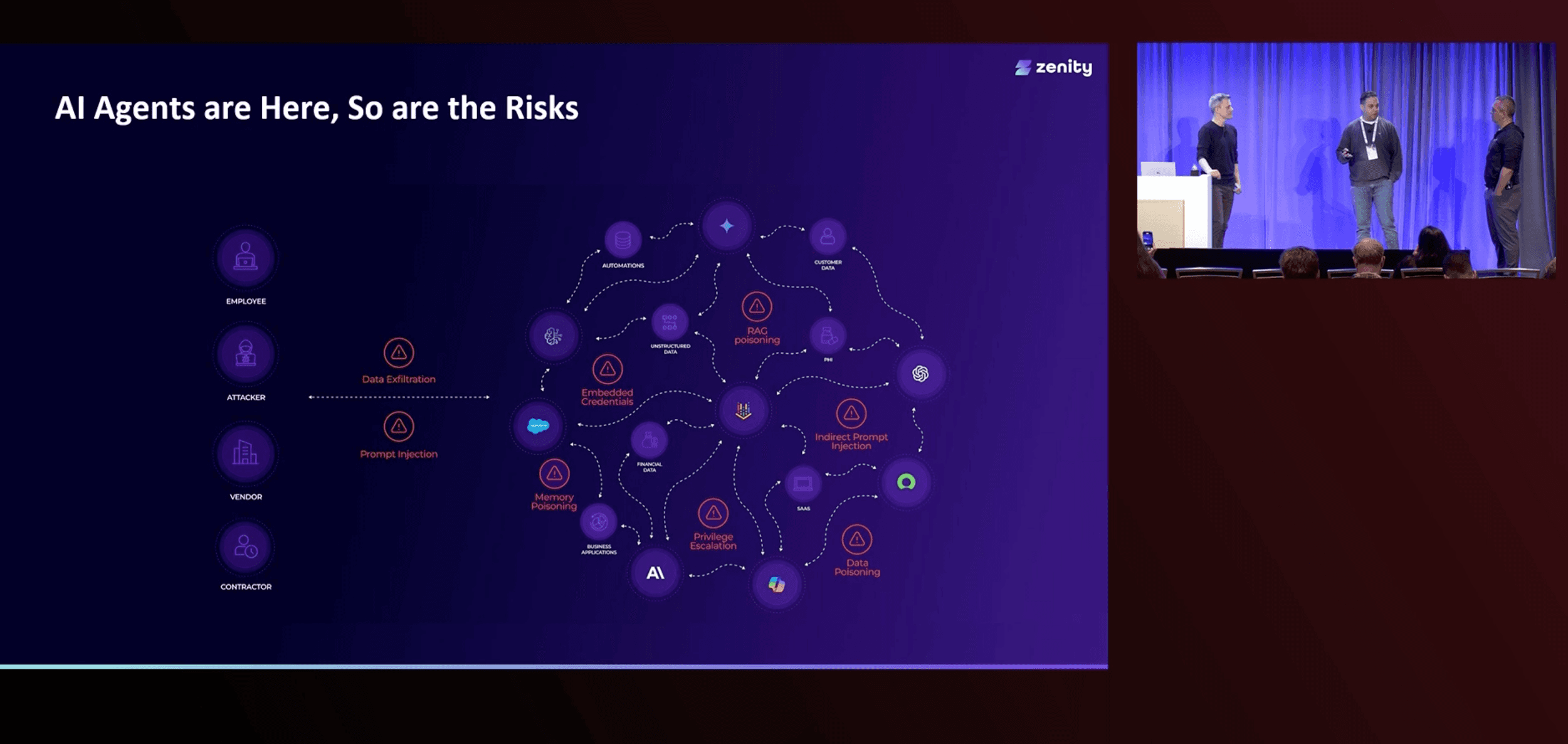

But their power is matched by complexity. These agents don’t behave like traditional software; they evolve with every prompt. They act across tools, departments, datasets, and even other agents. They’re built quickly, often by teams outside traditional software development or engineering. Even when built using low-code/no-code tools like Copilot Studio, their risk surface is dynamic, fragmented, and difficult to track.

And here’s the hard truth: most organizations have no idea how many AI Agents they’re running, what they do, or what data they touch. That’s why we believe security can’t be bolted on later. This is the moment for security teams to claim a seat at the table - not to block adoption, but to foster fearless innovation by putting the right guardrails and controls in place from the start.

What We Shared on Stage: Zenity’s Purpose-Built Approach

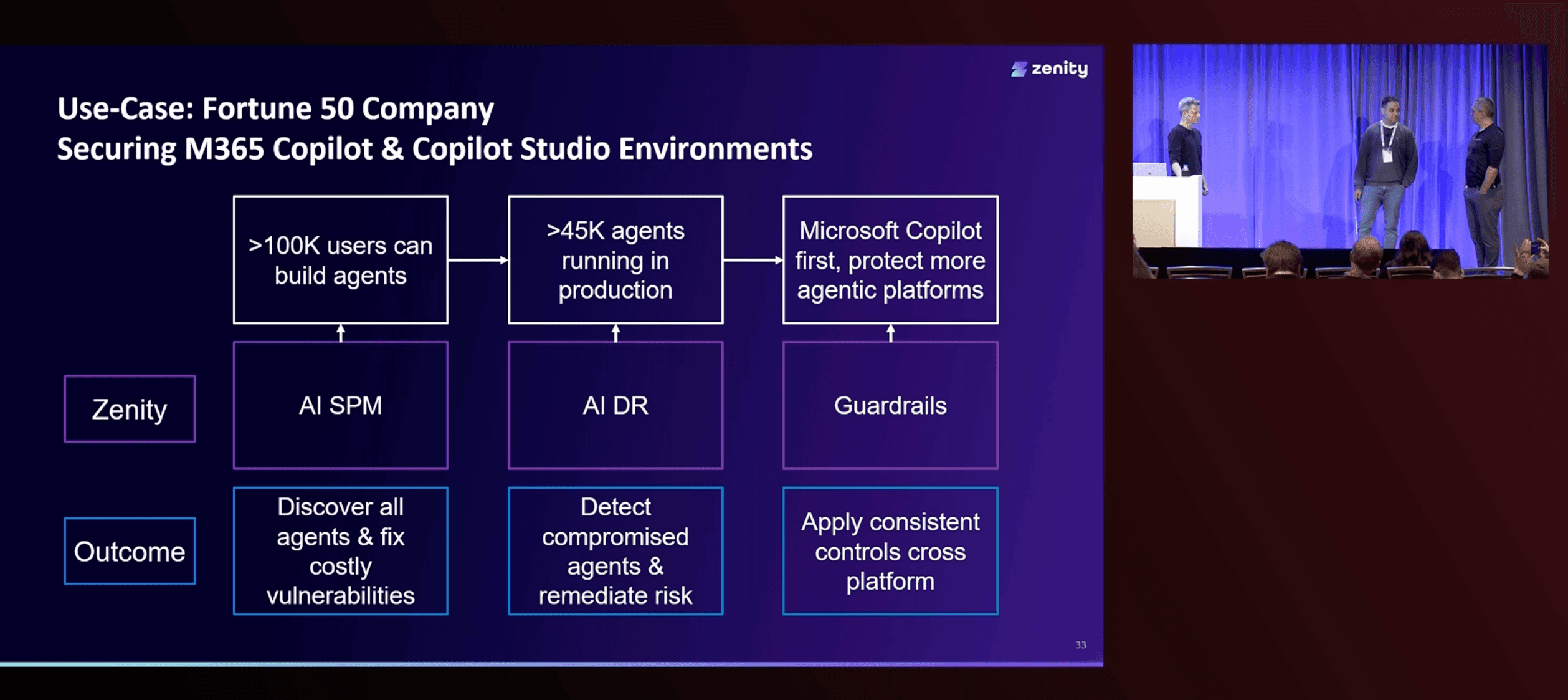

Last week, I had the privilege of joining Microsoft on stage at Build to share how Zenity is helping enterprises realize the full potential of AI Agents, securely and at scale. Zenity is the first end-to-end security and governance platform focused exclusively on AI Agents. That means:

- Agent-centric focus: We secure the agent itself - not just the model, prompt, or output. Zenity understands what the agent is built to do, how it behaves, and what it touches.

- Full lifecycle coverage: Zenity protects agents at every stage, from development intent to runtime behavior.

- Seamless Microsoft integration: Whether in Copilot Studio or Microsoft 365 Copilot, we plug directly into your ecosystem using existing APIs - no heavy lift, no friction.

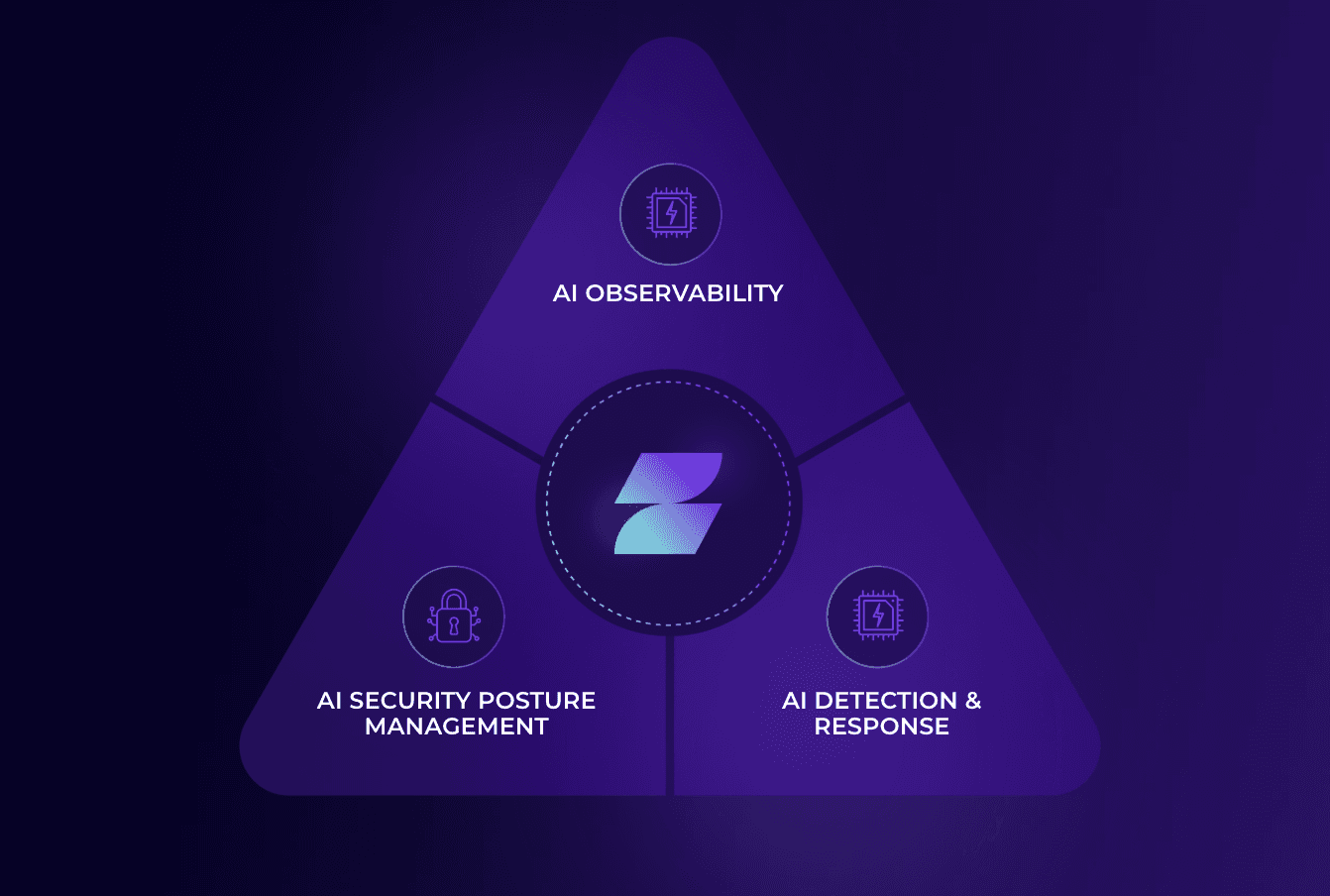

Our platform combines three critical pillars:

- AI Observability: See each agent across your org, understand what it’s doing, and trace its interactions.

- AISPM (AI Security Posture Management): Ensure configurations, access, and usage align with your policies.

- AIDR (AI Detection & Response): Monitor behavior in near-real time, catch threats early, and take action when agents drift from expected outcomes.

This is how we help organizations unlock the power of AI Agents without losing control.

What We Didn’t Have Time to Share

There’s only so much you can say in three minutes. What I couldn’t fully express on stage is just how fundamentally different, and important, AI Agent security is. Zenity is defining what security and governance look like for this next wave of AI innovation - AI Agents.

AI Agents represent a new attack surface, and securing them requires a new approach. Traditional tools were built for users, apps, or infrastructure, not for entities that behave independently, evolve rapidly, and operate across disconnected environments. We designed Zenity from the ground up to secure agents themselves, not just the models behind them or the prompts they receive.

Our platform combines AI Observability, AI Security Posture Management (AISPM), and AI Detection & Response (AIDR) into one cohesive system. We help security teams define, enforce, and automate policies that keep agent usage aligned with enterprise standards. That means preventing agents from accessing sensitive systems they shouldn’t, flagging insecure configurations, and detecting abnormal or malicious behavior, all without slowing innovation or adding unnecessary friction.
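To make those three kinds of checks a little more concrete, here is a minimal Python sketch. It is not Zenity’s API or implementation: the `Agent` record, its fields, the `SENSITIVE_SYSTEMS` list, and the rules themselves are all hypothetical, chosen only to illustrate what “access to sensitive systems,” “insecure configuration,” and “abnormal behavior” might look like as simple policy checks.

```python
from dataclasses import dataclass, field

# Hypothetical policy: systems no agent may touch without explicit approval.
SENSITIVE_SYSTEMS = {"hr-database", "payments-api"}


@dataclass
class Agent:
    """Illustrative inventory record for one agent (all fields are assumptions)."""
    name: str
    connected_systems: set[str]
    requires_auth: bool
    approved_systems: set[str] = field(default_factory=set)
    expected_actions: set[str] = field(default_factory=set)


def posture_findings(agent: Agent) -> list[str]:
    """Static checks on how the agent is configured."""
    findings = []
    # Sensitive systems the agent is connected to but was never approved for.
    unapproved = (agent.connected_systems & SENSITIVE_SYSTEMS) - agent.approved_systems
    for system in sorted(unapproved):
        findings.append(f"{agent.name}: unapproved access to sensitive system '{system}'")
    if not agent.requires_auth:
        findings.append(f"{agent.name}: agent is reachable without authentication")
    return findings


def runtime_findings(agent: Agent, observed_actions: list[str]) -> list[str]:
    """Runtime check: flag any observed action outside the agent's expected set."""
    return [
        f"{agent.name}: unexpected action '{action}'"
        for action in observed_actions
        if action not in agent.expected_actions
    ]


# Hypothetical agent and activity, hard-coded for illustration.
agent = Agent(
    name="invoice-helper",
    connected_systems={"sharepoint", "payments-api"},
    requires_auth=False,
    expected_actions={"read_invoice", "summarize"},
)

for finding in posture_findings(agent) + runtime_findings(agent, ["read_invoice", "send_email"]):
    print(finding)
```

In this toy example the agent is flagged three times: once for touching `payments-api` without approval, once for being reachable without authentication, and once for the unexpected `send_email` action. A real deployment would draw the inventory and activity data from the platforms where agents actually run rather than hard-coding them as done here.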

Lastly, with the work of the Zenity Labs team, we’re not just focused on today’s risks; we’re looking at what’s next. The team is actively tinkering, researching, and helping shape the broader industry conversation - most recently at our AI Agent Security Summit and in presentations at RSAC 2025. Securing AI Agents isn’t just about compliance; it’s about enabling organizations to move faster, smarter, and more confidently.

Where We’re Headed: A Glimpse Into Zenity’s Future

AI Agents are just the beginning. The way we work is being redefined, and with it the way we think about security must evolve.

We’re expanding our capabilities, deepening our Microsoft integrations, and continuously evolving the platform to address emerging use cases and risks. Stay tuned. We’re just getting started.

Let’s Keep the Conversation Going

Wherever you are in your AI Agent adoption journey, whether you’re thinking about your strategy for securing agents, trying to wrap your head around all of this, or just want to chat, I’d love to connect. These are uncharted waters, and collaboration is how we navigate them.

The AI Agent era is here. Let’s make sure we secure it, together.
