From IDE to CLI: Securing Agentic Coding Assistants

Today we’re excited to announce that Zenity now protects the most powerful, enterprise-critical coding assistants, including Cursor, Claude Code, and GitHub Copilot, from build-time to runtime.

As AI becomes a first-class developer tool, Zenity gives security teams the visibility and control they need to safely embrace coding assistants wherever they’re used: in IDEs, in CLIs, or in the cloud.

Why this matters - fast

Coding assistants are no longer niche. They’re embedded in daily developer workflows, and recent estimates put AI-generated code at more than 40% of new development worldwide. That scale is a huge productivity win, but it also creates new, highly exposed attack surfaces:

- Coding assistants can read and edit code and access local files (.env files, keys, secrets), all while calling external tools and Model Context Protocol (MCP) servers.

- They can act with broad agency, such as running code, invoking services, or interacting with ticketing and source control systems.

- High-risk features such as auto-run, sub-agents, agent skills and tools, and third-party MCP servers multiply that risk.

- Unmanaged or shadow coding assistant usage makes it impossible to enforce consistent security controls.

Put simply, organizations must adopt these coding assistants - but they cannot do so safely without new, AI-aware security controls. That’s the gap Zenity closes.

The reality in enterprises

Traditional security tools were built around different primitives: endpoints, containers, network flows, and static application vulnerabilities. Coding assistants fundamentally change the threat model because they introduce agent-centric attack paths directly into the development environment, one of the most sensitive areas of the enterprise.

These assistants widen the opening for indirect prompt injection through poisoned tool outputs, compromised packages, manipulated web content, or malicious MCP responses that silently alter agent behavior. Attackers can chain low-privilege misconfigurations into broader privilege escalation by hijacking agents or weakening their guardrails. At the same time, widespread adoption creates large shadow-AI footprints across developer laptops and cloud systems, outside the view of traditional AppSec, endpoint, and cloud security tools.

If security teams can’t see and control how coding assistants are configured and executed from build-time to runtime, critical doors remain open.

Zenity’s Coverage for Coding Assistants

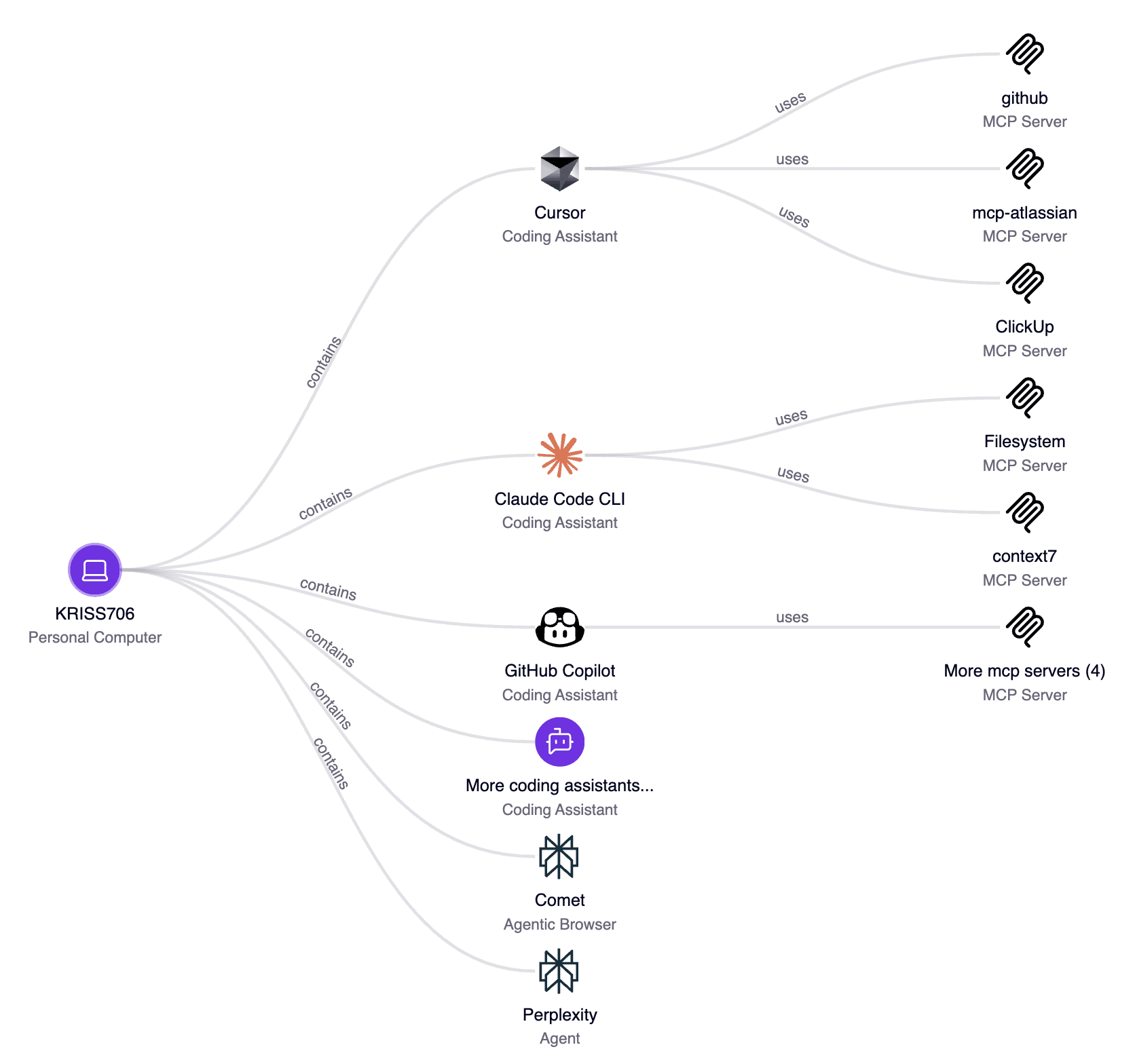

Zenity’s expanded coding assistant support delivers enterprise-grade protection across the most widely adopted and business-critical developer tools. Cursor, Claude Code, GitHub Copilot, and other leading assistants are now governed as enterprise agents within Zenity’s platform, using the same agent-centric security model already applied across SaaS and endpoint environments.

Zenity discovers and inventories coding assistants, associated MCPs, and shadow AI assets across developer endpoints and workstations, enriching each asset with configuration details, permissions, sub-components, and connected tools. This provides security teams with immediate insight into real exposure across the environment.

From there, Zenity enforces security controls across the full assistant lifecycle. Build-time instrumentation lets teams prevent unsafe configurations, such as unapproved MCP servers or insecure authorization policies, before execution. At runtime, Zenity observes assistant activity and mediates MCP calls and tool invocations, blocking misuse in real time. Security teams can build policies in Detect mode to understand their impact, then move to Prevent mode for immediate enforcement, with contextual in-chat guidance that preserves the developer’s workflow.

Solving real risks - core use cases

Discover shadow coding assistants

Map every coding assistant on enterprise devices - Cursor, Claude Code, GitHub Copilot, Windsurf, Tabnine and more - whether used within a CLI, as an IDE plugin, in a desktop app, or online. Each asset is enriched with configuration, permissions, and MCP usage so teams can triage real exposure quickly.

MCP governance (build-time → runtime)

Allow only approved MCP servers and safe configurations. Prevent coding assistants from configuring unapproved MCP servers during development, and block dangerous MCP invocations at runtime that could enable data exfiltration.

Prevent sensitive data leakage

Identify when a coding assistant is exposed to sensitive data or secrets (SSH keys, API tokens, .env values), or is about to share them. Zenity can redact outputs, block a coding assistant call, or present in-context guidance to the developer, all in real time.
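To make the detect-and-redact step concrete, here is a minimal, generic sketch of pattern-based secret redaction applied to text flowing into or out of an assistant. It is not Zenity’s detection logic; the rule names, the `redact` function, and the small pattern set are illustrative assumptions. The patterns rely on well-known formats (AWS access key IDs begin with `AKIA`, classic GitHub personal access tokens begin with `ghp_`, PEM private keys sit between `BEGIN`/`END` markers), plus a hypothetical heuristic for .env entries whose key name suggests a secret.

```python
from __future__ import annotations

import re

# Illustrative rules only; a real scanner needs a far larger, tested rule set.
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters/digits
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Classic GitHub personal access tokens: "ghp_" plus 36 alphanumerics
    "github_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    # PEM-encoded private keys, including the BEGIN/END markers
    "private_key": re.compile(
        r"-----BEGIN [A-Z ]*PRIVATE KEY-----.*?-----END [A-Z ]*PRIVATE KEY-----",
        re.DOTALL,
    ),
    # Hypothetical heuristic: .env lines whose key name suggests a secret
    "env_secret": re.compile(
        r"^(?P<key>[A-Z0-9_]*(?:SECRET|TOKEN|PASSWORD|API_KEY)[A-Z0-9_]*)=(?P<value>\S+)$",
        re.MULTILINE,
    ),
}


def _replacement(rule: str, match: re.Match) -> str:
    """Keep .env key names visible; replace everything else with a rule tag."""
    if rule == "env_secret":
        return f"{match.group('key')}=[REDACTED]"
    return f"[REDACTED:{rule}]"


def redact(text: str) -> tuple[str, list[str]]:
    """Return the redacted text plus the name of each rule that fired."""
    findings: list[str] = []
    for rule, pattern in SECRET_PATTERNS.items():
        text, count = pattern.subn(lambda m, r=rule: _replacement(r, m), text)
        findings.extend([rule] * count)
    return text, findings


if __name__ == "__main__":
    sample = "DB_PASSWORD=hunter2\naws key AKIAABCDEFGHIJKLMNOP in a comment\n"
    clean, hits = redact(sample)
    print(clean)
    print(hits)
```

A production scanner would add many more rules, entropy checks, and exception handling for known test fixtures. Returning the list of findings alongside the redacted text is what lets a caller decide whether to redact silently, block the call, or warn the developer.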

Secure against privilege escalation

Prevent high-risk scenarios in which an attacker proactively increases the agent’s permissions or lowers its guardrails in order to perform more critical actions, such as accessing sensitive local files or invoking disallowed tools to exfiltrate data.

Indirect prompt injection protection

Detect and neutralize indirect prompt injection vectors across tool outputs, package metadata, web searches, and code comments - anywhere instructions can be passed back to an assistant. This is critical for preventing poisoned suggestions and unauthorized agent behavior.

Build enforceable, hard-boundary policies

Security teams can create guardrails that are actively enforced - not just logged. For example, require MCP allowlists at build time and enforce those same restrictions during runtime. Policies can be rolled out in Detect mode first, and then switched to Prevent mode with informative in-chat messages that preserve developer context.
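The pairing of a build-time allowlist with the same runtime restriction, and the Detect-then-Prevent rollout, can be illustrated with a small policy object. This is a sketch under stated assumptions, not Zenity’s policy engine: it assumes an MCP config in JSON with a top-level `mcpServers` object (the shape used by Cursor’s `mcp.json`), and the `McpPolicy` class, the `jira` and `paste-uploader` servers, and their tool names are hypothetical. The allowlist is checked once when a config is written and again on every tool call; the mode decides whether a violation is only recorded or also blocked.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    DETECT = "detect"    # record the violation, let the action proceed
    PREVENT = "prevent"  # record the violation and block the action


@dataclass
class McpPolicy:
    # Hypothetical allowlist: MCP server name -> allowed tool names.
    # An empty set means every tool on that server is allowed.
    allowed: dict[str, set[str]]
    mode: Mode = Mode.DETECT
    events: list[str] = field(default_factory=list)

    def _decide(self, violation: str | None) -> bool:
        """Return True if the action may proceed under the current mode."""
        if violation is None:
            return True
        self.events.append(f"[{self.mode.value}] {violation}")
        return self.mode is Mode.DETECT

    def check_config(self, config_text: str) -> bool:
        """Build time: flag configs that register unapproved MCP servers."""
        servers = json.loads(config_text).get("mcpServers", {})
        unapproved = sorted(set(servers) - set(self.allowed))
        if unapproved:
            return self._decide("unapproved MCP servers: " + ", ".join(unapproved))
        return self._decide(None)

    def check_call(self, server: str, tool: str) -> bool:
        """Runtime: apply the same allowlist to each MCP tool invocation."""
        if server not in self.allowed:
            return self._decide(f"call to unapproved server {server!r}")
        tools = self.allowed[server]
        if tools and tool not in tools:
            return self._decide(f"tool {tool!r} is not allowed on {server!r}")
        return self._decide(None)


if __name__ == "__main__":
    config = '{"mcpServers": {"jira": {"command": "npx"}, "paste-uploader": {"command": "node"}}}'
    policy = McpPolicy(allowed={"jira": {"get_issue", "search_issues"}})
    print(policy.check_config(config))  # Detect mode: violation logged, config allowed
    policy.mode = Mode.PREVENT
    print(policy.check_call("jira", "get_issue"))  # approved tool on approved server
    print(policy.check_call("paste-uploader", "upload"))  # blocked in Prevent mode
    print(policy.events)
```

Starting in `Mode.DETECT` records would-be violations without interrupting anyone; switching the same policy object to `Mode.PREVENT` turns those records into blocks, so the rules that were observed during rollout are exactly the rules that get enforced.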

A quick scenario

As previously demonstrated by Zenity, even legitimate tools used by these assistants (e.g., the Jira MCP server) can be manipulated into causing a coding assistant like Cursor to search for and exfiltrate sensitive secrets (e.g., JWT tokens or local credentials) from a repository or the local file system without any user interaction.

Zenity can detect such high-risk scenarios and block the malicious MCP tool call to prevent the sensitive data exfiltration attempt - all while recording the incident and surfacing remediation steps to the security team.

Get started

Coding assistants are here to stay, but uncontrolled adoption is too risky for enterprises. Zenity’s expanded support for Cursor, Claude Code, GitHub Copilot, and other coding assistants gives teams the visibility, detection, and enforcement they need to scale AI-driven development safely and quickly.

See it live and evaluate how build-time to runtime enforcement works in your environment.
