Seeing What AI Touches: Introducing Data Lens

Security teams are entering a new phase of risk driven by the combination of AI agents and broad access to internal and external data. Agents are no longer limited to responding to prompts. They read files, pull documents from shared repositories, query external sources, and move information across systems on behalf of users.

This shift brings real business value. Knowledge becomes easier to access, workflows move faster, and information that once required deliberate effort can be surfaced instantly. At the same time, it introduces a challenge security teams have never had to manage at this scale.

AI agents do not simply access data. They make decisions about what data to use, when to use it, and how to present it. When those decisions play out across years of accumulated permissions, overshared content, and loosely governed repositories, risk compounds quickly. Sensitive information can spread across tools and users without any obvious signal that something has gone wrong.

Until now, security teams have lacked a reliable way to understand how AI actually interacts with data at runtime. They could see permissions and static classifications, but not which data agents were touching, how frequently, or how that access changed over time. That visibility gap is where risk quietly accumulates. Data Lens is designed to close it.

Why Agentic Data Visibility Matters

Because agents read files, fetch documents, and move data between systems, often without direct user involvement, the question of which data they can reach becomes a security question in its own right.

This amplifies a problem enterprises have struggled with for decades: overshared sensitive data. Traditional approaches such as data labeling, classification, and DSPM were designed to understand data at rest. They help teams know what data exists and how it should be handled, but they were never built to capture how data is actually used once AI agents enter the picture.

What once required deliberate effort, such as browsing folders and knowing where to look, can now happen instantly through an AI assistant. Documents uploaded years ago to broadly shared repositories can be surfaced in response to simple prompts. Sensitive content such as performance reviews, financial data, and internal strategy files can appear without warning, exposing years of accumulated oversharing in seconds.

As agents become more capable, they do not just surface existing oversharing. They actively create new data movement by copying files, propagating content into responses, and spreading sensitive information across tools and users. Static labels and classifications do not change fast enough to reflect this behavior, and point-in-time scans cannot show how exposure evolves at runtime.

Data Lens is built to address this shift by making the data layer visible as it is actually touched and used by AI agents.

What Data Lens Is

Data Lens transforms runtime observability into agent-centric data insight.

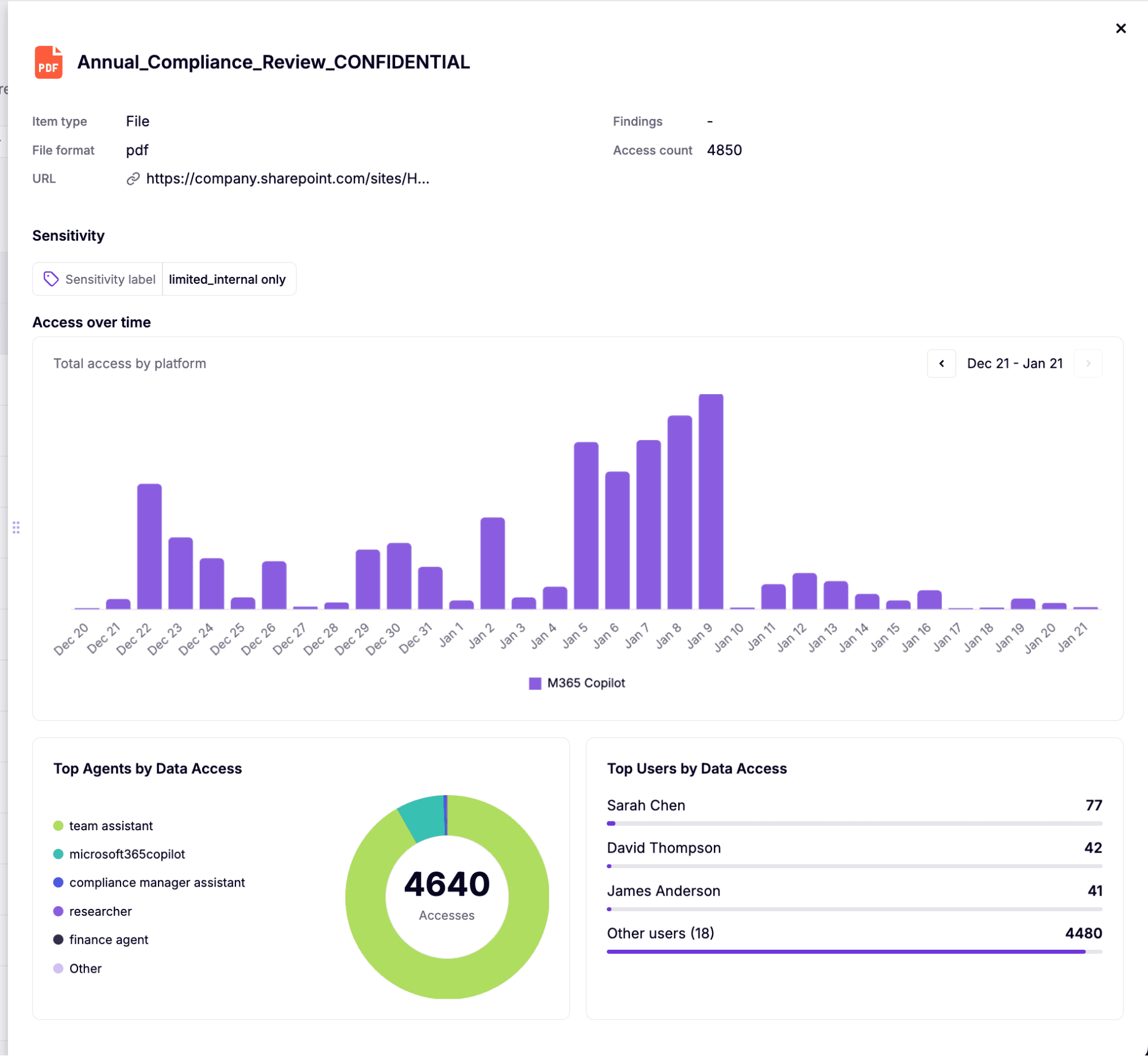

Instead of analyzing individual interactions in isolation, Data Lens aggregates AI activity and pivots the view around data items themselves. Files, documents, and web content become first-class objects that security teams can understand in the context of how agents and users interact with them.

This approach allows teams to move away from event-level noise and toward a clearer understanding of which data is most exposed, most accessed, and most likely to introduce risk as AI usage scales. Data Lens is a core capability within Zenity AI Observability, keeping data insight, agent behavior, and runtime findings connected in a single view instead of fragmented across tools.
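To make the idea of pivoting around data items concrete, here is a minimal Python sketch. It is an illustration only and not Zenity's implementation or API: the `AccessEvent` and `ItemSummary` types, the field names, and the sample events are all hypothetical. It assumes each runtime event records which agent touched which item, on behalf of which user, and when.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AccessEvent:
    """One hypothetical runtime event: an agent touched an item for a user."""
    agent: str
    user: str
    item: str
    timestamp: str  # ISO 8601 in UTC, so string comparison follows time order


@dataclass
class ItemSummary:
    """Per-item view built from many individual events."""
    access_count: int = 0
    agents: set = field(default_factory=set)
    users: set = field(default_factory=set)
    last_seen: str = ""


def pivot_by_item(events):
    """Group event-level activity by the data item that was touched."""
    summaries = defaultdict(ItemSummary)
    for e in events:
        s = summaries[e.item]
        s.access_count += 1
        s.agents.add(e.agent)
        s.users.add(e.user)
        s.last_seen = max(s.last_seen, e.timestamp)
    return dict(summaries)


# Made-up sample data, for illustration only.
events = [
    AccessEvent("hr-assistant", "alice", "reviews/2021.docx", "2025-01-10T09:00:00Z"),
    AccessEvent("hr-assistant", "bob", "reviews/2021.docx", "2025-01-11T14:30:00Z"),
    AccessEvent("sales-copilot", "carol", "reviews/2021.docx", "2025-01-12T08:15:00Z"),
    AccessEvent("sales-copilot", "carol", "pricing.xlsx", "2025-01-12T08:20:00Z"),
]

summary = pivot_by_item(events)

# List the most accessed items first.
for item, s in sorted(summary.items(), key=lambda kv: -kv[1].access_count):
    print(item, s.access_count, sorted(s.agents), sorted(s.users))
```

The point of the sketch is the change of key: the same events that would read as a long activity log become a short list of items, each with its own access count, agents, and users.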

Where Data Lens Fits in the Real World

AI Assistant Oversharing in Enterprise Content Repositories

Organizations deploying AI assistants across internal content repositories often discover that years of accumulated access permissions become exploitable through a single prompt. Data Lens shows which files AI agents are surfacing, how often, and to whom, giving security teams the context they need to prioritize remediation before unintended exposure turns into an incident.

Compliance and Audit Readiness

Regulated organizations need to demonstrate control over sensitive data. Data Lens provides a clear audit trail showing which AI agents accessed which data, when it happened, and which users were exposed. This supports audits and regulatory reviews without manual log reconstruction.

Faster Incident Response

When an incident occurs, responders need to understand the blast radius quickly. Data Lens shows what data an agent touched during a session, who was exposed, and whether the same data appeared across other AI services. Investigations that once took hours now start with a clear picture.
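As a rough illustration of the blast-radius question, the sketch below answers the three points above for a single session. It is a simplified example under stated assumptions, not the product's logic: the event dictionaries, their keys (`session`, `service`, `user`, `item`), and the `blast_radius` function are hypothetical.

```python
def blast_radius(events, session_id):
    """Summarize what one session touched and where else that data appeared.

    `events` is a list of dicts with the hypothetical keys
    "session", "service", "user", and "item".
    """
    in_session = [e for e in events if e["session"] == session_id]
    items = {e["item"] for e in in_session}
    users = {e["user"] for e in in_session}
    session_services = {e["service"] for e in in_session}

    # For each item from the session, collect other AI services that also touched it.
    seen_elsewhere = {}
    for e in events:
        if e["item"] in items and e["service"] not in session_services:
            seen_elsewhere.setdefault(e["item"], set()).add(e["service"])

    return {"items": items, "users": users, "seen_elsewhere": seen_elsewhere}


# Made-up sample data, for illustration only.
events = [
    {"session": "s1", "service": "chat", "user": "alice", "item": "plan.docx"},
    {"session": "s1", "service": "chat", "user": "alice", "item": "salaries.xlsx"},
    {"session": "s2", "service": "search", "user": "bob", "item": "salaries.xlsx"},
    {"session": "s3", "service": "search", "user": "carol", "item": "notes.txt"},
]

report = blast_radius(events, "s1")
print(report)
```

In this made-up data, session `s1` touched two files for one user, and one of those files also shows up in a second service, which is the kind of cross-service overlap a responder would want to see first.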

Working Alongside Existing Data Security Tools

Data Lens does not replace DSPM or classification tools. Its role is different.

Traditional data security tools focus on data at rest. Data Lens shows how data is actually used by AI agents at runtime. By correlating agent activity with sensitivity signals, Data Lens surfaces cases where sensitive data is accessed in unexpected ways, unlabeled data behaves like sensitive data, or overshared content becomes amplified through AI usage.

These insights feed back into existing workflows and help teams decide where to rescan, relabel, or tighten access.
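One way to picture the correlation between agent activity and sensitivity signals is a small rule set over per-item usage data. The sketch below is hypothetical throughout: the `flag_items` function, the stats fields, the `"sensitive"` label value, and the thresholds are assumptions chosen for illustration, and real detection would rely on much richer signals.

```python
def flag_items(stats, labels, max_users=5, min_hits=10):
    """Return {item: [reasons]} for items whose runtime use looks risky.

    stats:  {item: {"hits": int, "users": set, "broadly_shared": bool,
                    "co_accessed_with_sensitive": bool}}  (hypothetical shape)
    labels: {item: "sensitive" | "public" | ...}; a missing key means unlabeled
    """
    findings = {}
    for item, s in stats.items():
        label = labels.get(item)
        reasons = []
        # Case 1: labeled sensitive data reaching more users than expected.
        if label == "sensitive" and len(s["users"]) > max_users:
            reasons.append("sensitive item reached many users")
        # Case 2: unlabeled data that is used alongside sensitive data.
        if label is None and s["co_accessed_with_sensitive"]:
            reasons.append("unlabeled item used alongside sensitive data")
        # Case 3: broadly shared content that agents surface frequently.
        if s["broadly_shared"] and s["hits"] >= min_hits:
            reasons.append("overshared item amplified by AI usage")
        if reasons:
            findings[item] = reasons
    return findings


# Made-up sample data, for illustration only.
stats = {
    "reviews.docx": {"hits": 4, "users": {"a", "b", "c", "d", "e", "f"},
                     "broadly_shared": False, "co_accessed_with_sensitive": False},
    "draft.txt": {"hits": 2, "users": {"a"},
                  "broadly_shared": False, "co_accessed_with_sensitive": True},
    "handbook.pdf": {"hits": 40, "users": {"a", "b"},
                     "broadly_shared": True, "co_accessed_with_sensitive": False},
    "menu.pdf": {"hits": 1, "users": {"a"},
                 "broadly_shared": False, "co_accessed_with_sensitive": False},
}
labels = {"reviews.docx": "sensitive", "handbook.pdf": "public", "menu.pdf": "public"}

findings = flag_items(stats, labels)
for item, reasons in findings.items():
    print(item, reasons)
```

Each reason maps to a follow-up action from the paragraph above: a rescan, a relabel, or a tighter access policy.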

Bringing Agentic Data Security Into Focus

AI agents are changing how data moves inside organizations. Traditional security tools were not designed to see this movement. They analyze static states, not autonomous behavior.

Data Lens gives security teams visibility into which data AI agents touch, how exposure spreads across platforms, and where risk concentrates. It turns raw activity into actionable intelligence and lays the groundwork for governing AI-driven data access at enterprise scale.

To see Data Lens in action, book a demo or connect with our team.
